Exploitation and Exploration in Machine Learning

In reinforcement learning, exploration is the act of letting an agent discover new information about its environment, while exploitation is making the agent stick to the knowledge it has already gained. If the agent only exploits past experience, it is likely to get stuck on a suboptimal policy, because it never tries actions that might turn out to be better. On the other hand, if it only explores, it never makes use of what it has learned and may never settle on a good policy. Balancing the two is known as the exploration-exploitation dilemma.

Exploitation in Machine Learning

Exploitation is a strategy in reinforcement learning in which an agent uses its existing knowledge to choose, in a given state, the action it expects to maximize reward. The goal of exploitation is to use what is already known about the environment to achieve the best outcome.

Key Aspects of Exploitation

The key aspects of exploitation include −

- Maximizing reward − The main objective of exploitation is to maximize the expected reward based on the current understanding of the environment. This involves choosing the action whose learned value, that is, its estimated reward, is the highest.

- Efficient decision-making − Exploitation makes decision-making efficient by focusing on actions already known to yield high rewards, avoiding the cost of trying out untested actions.

- Risk management − Exploitation carries relatively little risk because it relies on tried and tested actions, reducing the uncertainty associated with less familiar choices.
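Exploitation amounts to picking the action with the highest current value estimate. The following is a minimal Python sketch, assuming the agent keeps its value estimates for the current state in a list indexed by action (the names `greedy_action` and `q_values` are hypothetical, chosen for illustration):

```python
from typing import Sequence


def greedy_action(q_values: Sequence[float]) -> int:
    """Return the index of the action with the highest estimated value.

    Ties are broken in favour of the lowest index, because max() returns
    the first maximal element it encounters.
    """
    if not q_values:
        raise ValueError("q_values must contain at least one action")
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Hypothetical value estimates for three actions in some state.
estimates = [0.2, 0.75, 0.5]
print(greedy_action(estimates))  # prints 1
```

Because it never deviates from the current best estimate, this rule on its own cannot discover that some other action is actually better.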

Exploration in Machine Learning

Exploration is the process by which an agent gains knowledge about the environment or model. During exploration, the agent chooses actions whose outcomes are uncertain in order to collect information about the states and rewards those actions lead to.

Key Aspects of Exploration

The key aspects of exploration include −

- Gaining information − The main objective of exploration is to let an agent gather information by trying new actions in a state, which improves its understanding of the model or environment.

- Reduction of uncertainty − Every time an action is tried, the agent gets another sample of its reward, so its estimate of that action's value becomes more reliable. Exploring actions that have rarely been tried reduces the uncertainty in these estimates.

- State space coverage − In environments with large or continuous state spaces, exploration ensures that a sufficient variety of regions of the state space is visited, preventing learning that is biased towards a small number of experiences.
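To make the ideas of reducing uncertainty and covering the available options concrete, here is a minimal sketch of pure exploration on a problem with a single state: the agent picks actions uniformly at random and keeps a running average of the rewards each one produces. The helper `explore_uniformly` and the reward function passed as `pull` are hypothetical; the running average uses the standard incremental update Q ← Q + (R − Q) / N.

```python
import random
from typing import Callable, List, Tuple


def explore_uniformly(
    n_actions: int,
    pull: Callable[[int], float],
    steps: int,
    seed: int = 0,
) -> Tuple[List[float], List[int]]:
    """Pick actions uniformly at random and keep running-average estimates.

    pull(a) is a hypothetical function that performs action a and returns
    the observed reward.
    """
    rng = random.Random(seed)
    estimates = [0.0] * n_actions
    counts = [0] * n_actions
    for _ in range(steps):
        a = rng.randrange(n_actions)  # every action is equally likely
        reward = pull(a)
        counts[a] += 1
        # Incremental sample average: Q <- Q + (R - Q) / N
        estimates[a] += (reward - estimates[a]) / counts[a]
    return estimates, counts


true_rewards = [1.0, 2.0, 3.0]  # hypothetical deterministic rewards
estimates, counts = explore_uniformly(3, lambda a: true_rewards[a], steps=300)
print(counts)
print(estimates)
```

On average each action receives about a third of the 300 steps. The visit counts show how well each action has been covered, and an action with few visits has a less reliable estimate. Pure random exploration like this never uses what it learns, which is why practical methods mix it with exploitation.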

Action Selection

The objective of reinforcement learning is to teach the agent how to behave in various states. During training, the agent learns which actions to perform using approaches such as greedy action selection, epsilon-greedy action selection, and upper confidence bound (UCB) action selection.

Exploration Vs. Exploitation Tradeoff

The choice between relying on the agent's existing knowledge and trying a new, possibly random, action is called the exploration-exploitation trade-off. When the agent explores, it improves its knowledge, which can lead to better decisions over time. When it exploits, it collects the highest reward it currently knows how to get right away. Since a single action cannot do both, the agent has to compromise.

How the agent divides its actions between exploration and exploitation should depend on its current state and the complexity of the learning task. A common pattern is to explore heavily early in training, when estimates are unreliable, and to exploit more as they improve.
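A common way to shift the balance over time is to explore heavily at the start of training and gradually exploit more, by decaying the probability ε of taking an exploratory action. The sketch below assumes a linear schedule; the function name `linear_epsilon` and its default values (`start=1.0`, `end=0.05`, `decay_steps=1000`) are illustrative choices, not values prescribed by this tutorial.

```python
def linear_epsilon(step: int, start: float = 1.0, end: float = 0.05,
                   decay_steps: int = 1000) -> float:
    """Linearly anneal the exploration probability from `start` to `end`.

    After `decay_steps` steps the rate stays at `end`, so the agent keeps
    a small amount of exploration for the rest of training.
    """
    if step >= decay_steps:
        return end
    fraction = step / decay_steps
    return start + fraction * (end - start)


for step in (0, 250, 500, 1000, 5000):
    print(step, round(linear_epsilon(step), 4))
```

Exponential decay, which multiplies ε by a constant factor each step, is an equally common alternative; which schedule works better depends on the task.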

Techniques for Balancing Exploration and Exploitation

The following are some techniques for balancing exploration and exploitation in reinforcement learning −

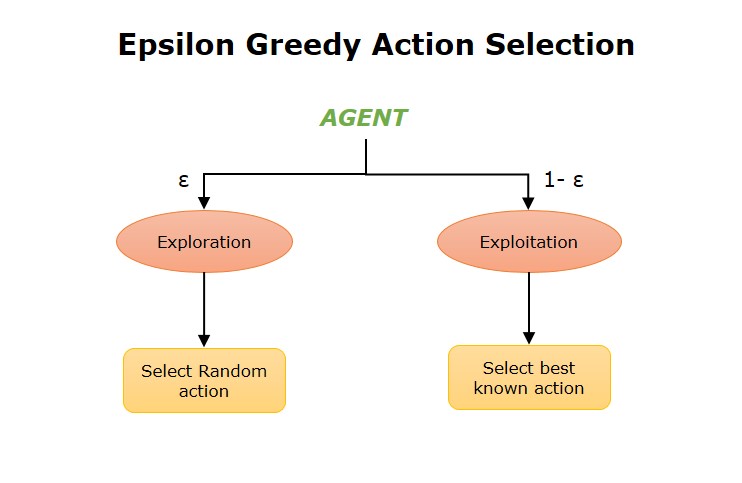

Epsilon-Greedy Action Selection

In reinforcement learning, the agent usually selects an action based on its expected reward. A purely greedy agent always chooses the action with the highest estimated reward for the given state. In epsilon-greedy action selection, the agent combines exploitation, which draws on its prior knowledge, with exploration, which looks for new options.

The epsilon-greedy method chooses the action with the highest estimated reward most of the time, that is, with probability 1 − ε. With a small probability ε, it explores instead, choosing an action at random rather than exploiting what the agent has learned so far. The goal is to strike a balance between exploration and exploitation.
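A minimal Python sketch of epsilon-greedy selection follows. It assumes the agent's value estimates for the current state are in a list `q_values` and that the exploratory branch picks uniformly among all actions, including the greedy one; the function name `epsilon_greedy_action` is hypothetical.

```python
import random
from typing import Optional, Sequence


def epsilon_greedy_action(q_values: Sequence[float], epsilon: float,
                          rng: Optional[random.Random] = None) -> int:
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if not 0.0 <= epsilon <= 1.0:
        raise ValueError("epsilon must be in [0, 1]")
    if rng is None:
        rng = random.Random()
    if rng.random() < epsilon:
        # Explore: every action (including the greedy one) is equally likely.
        return rng.randrange(len(q_values))
    # Exploit: choose the action with the highest current estimate.
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(42)
q = [0.1, 0.9, 0.4]
picks = [epsilon_greedy_action(q, epsilon=0.1, rng=rng) for _ in range(1000)]
# Fraction of greedy picks; its expected value is 0.9 + 0.1 / 3.
print(picks.count(1) / len(picks))
```

Because the random branch can also land on the greedy action, the greedy action is chosen with probability 1 − ε + ε/k for k actions, slightly more than 1 − ε.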

Multi-Armed Bandit Frameworks

The multi-armed bandit framework provides a formal basis for managing the balance between exploration and exploitation in sequential decision-making problems. In a bandit problem, the agent repeatedly chooses one of several actions (arms), each with an unknown reward distribution, and tries to maximize its total reward. Bandit algorithms handle the exploration-exploitation trade-off under a variety of reward structures and conditions.
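The following sketch sets up a small Bernoulli bandit, in which each arm pays a reward of 1 with a fixed hidden probability, and measures cumulative regret, the expected reward lost by not always pulling the best arm. It compares a purely greedy policy with epsilon-greedy. The class and function names, the arm probabilities, and ε = 0.1 are all illustrative assumptions.

```python
import random
from typing import Callable, List, Sequence

# A policy receives (estimates, counts, t) and returns the arm to pull.
Policy = Callable[[List[float], List[int], int], int]


class BernoulliBandit:
    """k-armed bandit: arm a pays 1 with probability probs[a], else 0.

    The probabilities are hidden from the agent, which only sees rewards.
    """

    def __init__(self, probs: Sequence[float], seed: int = 0) -> None:
        self.probs = list(probs)
        self.rng = random.Random(seed)

    def pull(self, arm: int) -> float:
        return 1.0 if self.rng.random() < self.probs[arm] else 0.0


def run(bandit: BernoulliBandit, choose: Policy, steps: int) -> float:
    """Play `steps` rounds and return the cumulative regret.

    Regret per round = mean of the best arm - mean of the chosen arm,
    i.e. the expected reward lost by not pulling the best arm.
    """
    k = len(bandit.probs)
    estimates, counts = [0.0] * k, [0] * k
    best_mean = max(bandit.probs)
    regret = 0.0
    for t in range(1, steps + 1):
        arm = choose(estimates, counts, t)
        reward = bandit.pull(arm)
        counts[arm] += 1
        # Incremental sample average of the rewards seen for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        regret += best_mean - bandit.probs[arm]
    return regret


def greedy(estimates: List[float], counts: List[int], t: int) -> int:
    # Pure exploitation; ties go to the lowest index.
    return max(range(len(estimates)), key=lambda a: estimates[a])


def make_epsilon_greedy(epsilon: float, seed: int = 1) -> Policy:
    rng = random.Random(seed)

    def choose(estimates: List[float], counts: List[int], t: int) -> int:
        if rng.random() < epsilon:
            return rng.randrange(len(estimates))  # explore
        return greedy(estimates, counts, t)       # exploit

    return choose


probs = [0.3, 0.5, 0.8]  # illustrative arm probabilities
greedy_regret = run(BernoulliBandit(probs), greedy, steps=5000)
eps_regret = run(BernoulliBandit(probs), make_epsilon_greedy(0.1), steps=5000)
print(f"greedy regret: {greedy_regret:.1f}")
print(f"epsilon-greedy regret: {eps_regret:.1f}")
```

With zero-initialized estimates, the greedy agent keeps pulling arm 0: ties go to index 0, and once that arm has paid out its estimate stays positive while the others stay at zero. It therefore never discovers the 0.8 arm, and its regret grows by 0.5 every round. Epsilon-greedy keeps sampling the other arms, finds the best one, and accumulates far less regret.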

Upper Confidence Bound

The Upper Confidence Bound (UCB) is a popular algorithm for balancing exploration and exploitation in reinforcement learning. It is based on the principle of optimism in the face of uncertainty: it chooses the action that maximizes an upper confidence bound on the expected reward. This means it takes into account both the mean reward of an action and the uncertainty in that estimate, so actions that have been tried only a few times receive a bonus that encourages exploring them.
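One widely used instance of this idea is UCB1, which scores each action a at time step t as Q(a) + c · sqrt(ln t / N(a)), where Q(a) is the mean reward observed for a, N(a) is how many times a has been tried, and c controls the amount of exploration (c = sqrt(2) gives the classic UCB1 bonus). The Python sketch below implements that rule; the function name `ucb_action` and its parameter names are hypothetical.

```python
import math
from typing import Sequence


def ucb_action(estimates: Sequence[float], counts: Sequence[int], t: int,
               c: float = math.sqrt(2)) -> int:
    """UCB1-style action selection.

    estimates[a]: mean reward observed for action a so far
    counts[a]:    how many times action a has been taken
    t:            current time step (1-based)
    c:            exploration weight; sqrt(2) gives the classic UCB1 bonus
    """
    # An action that has never been tried has an unbounded upper bound,
    # so try every action once before using the formula.
    for a, n in enumerate(counts):
        if n == 0:
            return a
    log_t = math.log(t)
    scores = [q + c * math.sqrt(log_t / n) for q, n in zip(estimates, counts)]
    return max(range(len(scores)), key=lambda a: scores[a])


# Arm 1 has a slightly lower mean but has only been tried twice,
# so its uncertainty bonus makes it the UCB choice.
print(ucb_action([0.6, 0.55], [100, 2], t=102))  # prints 1
```

The bonus term shrinks as N(a) grows and grows slowly with t, so an action that has not been tried for a while is eventually picked again, even if its mean currently looks worse.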