Strawberry fields forever will exist for the in-demand fruit, but the number of laborers who do the backbreaking work of harvesting it may continue to dwindle. Although raised, high-bed cultivation eases some of the manual labor, the need for robots to help harvest strawberries, tomatoes, and similar produce is apparent.

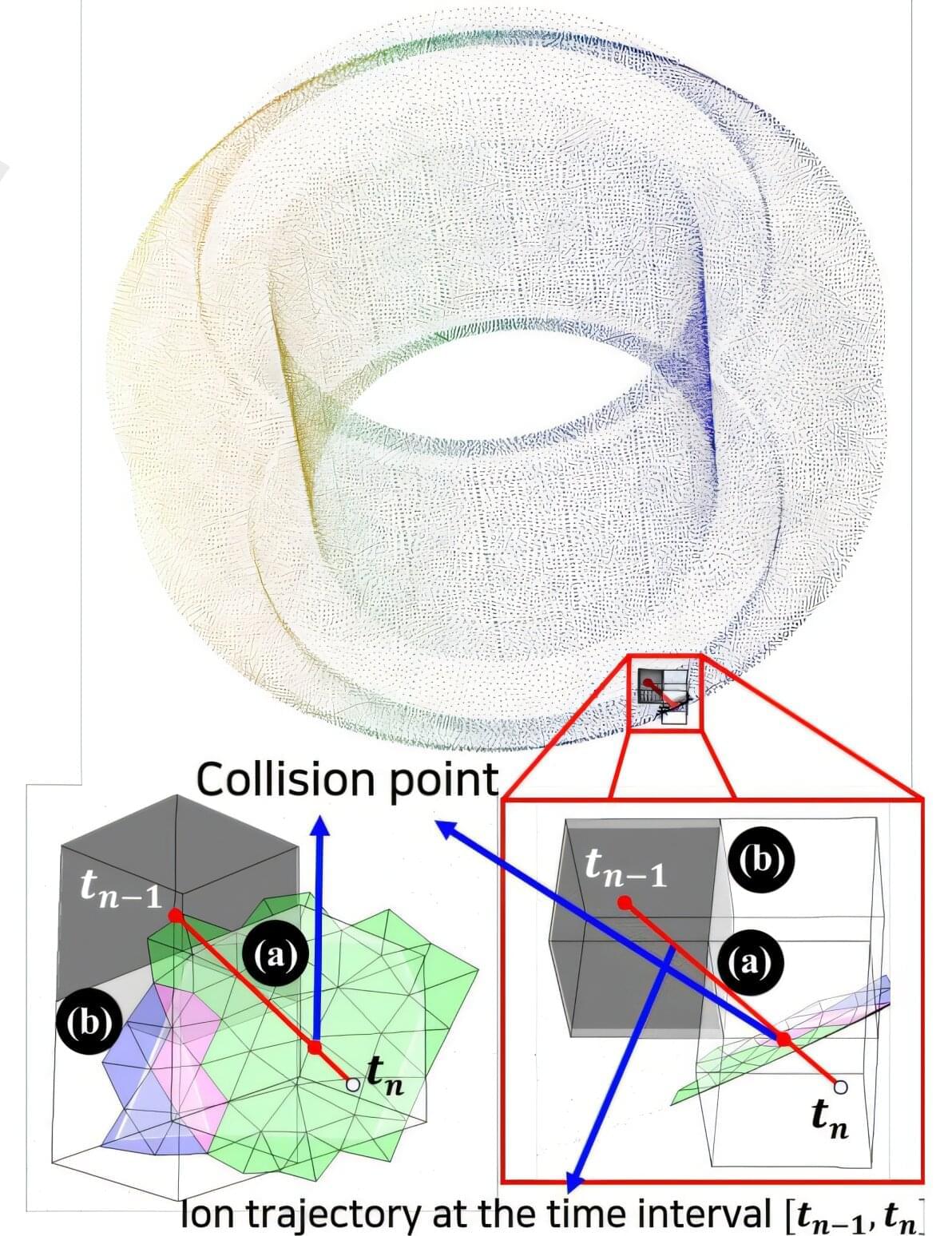

As a first step, Osaka Metropolitan University Assistant Professor Takuya Fujinaga has developed an algorithm for robots to autonomously drive in two modes: moving to a pre-designated destination and moving alongside raised cultivation beds. The Graduate School of Engineering researcher experimented with an agricultural robot that utilizes lidar point cloud data to map the environment.
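To make the two driving modes concrete, here is a minimal Python sketch of how a controller might switch between heading for a destination and tracking a cultivation bed. It is an illustration under stated assumptions, not Fujinaga's algorithm: the names `Mode`, `steer_to_destination`, `steer_along_bed`, and `steering_command` are hypothetical, the lidar data is simplified to 2D points in the robot's own frame (x forward, y to the left), the bed is assumed to be on the robot's left, and simple proportional steering stands in for whatever control law the actual robot uses.

```python
import math
from enum import Enum


class Mode(Enum):
    TO_DESTINATION = 1  # drive toward a pre-designated point
    ALONG_BED = 2       # drive alongside a raised cultivation bed


def steer_to_destination(pose, goal, gain=1.0):
    """Return an angular velocity that turns the robot toward the goal.

    pose is (x, y, heading_in_radians); goal is (x, y). Hypothetical helper.
    """
    x, y, heading = pose
    desired = math.atan2(goal[1] - y, goal[0] - x)
    # Wrap the heading error into [-pi, pi] so the robot takes the short turn.
    error = math.atan2(math.sin(desired - heading), math.cos(desired - heading))
    return gain * error


def steer_along_bed(points, target_gap=0.5, gain=1.0, max_range=2.0):
    """Return an angular velocity that holds a fixed gap to a bed on the left.

    points are (x, y) lidar returns in the robot frame. Assumption: every
    return ahead of the robot and to its left belongs to the bed.
    """
    side = [y for x, y in points if 0.0 <= x <= max_range and 0.0 < y <= max_range]
    if not side:
        return 0.0  # no bed detected: keep driving straight
    gap = sum(side) / len(side)
    # Positive output turns left (toward the bed) when the gap is too large.
    return gain * (gap - target_gap)


def steering_command(mode, pose, goal, points):
    """Dispatch to the steering rule for the active driving mode."""
    if mode is Mode.TO_DESTINATION:
        return steer_to_destination(pose, goal)
    return steer_along_bed(points)
```

A caller would pick the mode from the task at hand, for example `steering_command(Mode.ALONG_BED, pose, goal, points)` while the robot travels down a row, and `Mode.TO_DESTINATION` when it moves between rows. A real system would also need speed control, obstacle handling, and filtering of the raw point cloud, all of which are omitted here.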
