Portal:Computer programming

Portal maintenance status:(September 2019)

|

The Computer Programming Portal

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process. (Full article...)

Selected articles - load new batch

- Image 1

.svg/250px-Microsoft_logo_(2012).svg.png)

Logo since August 17, 2012

Microsoft is a multinational computer technology corporation. Microsoft was founded on April 4, 1975, by Bill Gates and Paul Allen in Albuquerque, New Mexico. Its current best-selling products are the Microsoft Windowsoperating system; Microsoft Office, a suite of productivity software; Xbox, a line of entertainment of games, music, and video; Bing, a line of search engines; and Microsoft Azure, a cloud services platform.

In 1980, Microsoft formed a partnership with IBM to bundle Microsoft's operating system with IBM computers; with that deal, IBM paid Microsoft a royalty for every sale. In 1985, IBM requested Microsoft to develop a new operating system for their computers called OS/2. Microsoft produced that operating system, but also continued to sell their own alternative, which proved to be in direct competition with OS/2. Microsoft Windows eventually overshadowed OS/2 in terms of sales. When Microsoft launched several versions of Microsoft Windows in the 1990s, they had captured over 90% market share of the world's personal computers.

As of June 30, 2015, Microsoft has a global annual revenue of US$86.83 billion (~$109 billion in 2023) and 128,076 employees worldwide. It develops, manufactures, licenses, and supports a wide range of software products for computing devices. (Full article...) - Image 2In C++computer programming, allocators are a component of the C++ Standard Library. The standard library provides several data structures, such as list and set, commonly referred to as containers. A common trait among these containers is their ability to change size during the execution of the program. To achieve this, some form of dynamic memory allocation is usually required. Allocators handle all the requests for allocation and deallocation of memory for a given container. The C++ Standard Library provides general-purpose allocators that are used by default, however, custom allocators may also be supplied by the programmer.

Allocators were invented by Alexander Stepanov as part of the Standard Template Library (STL). They were originally intended as a means to make the library more flexible and independent of the underlying memory model, allowing programmers to utilize custom pointer and reference types with the library. However, in the process of adopting STL into the C++ standard, the C++ standardization committee realized that a complete abstraction of the memory model would incur unacceptable performance penalties. To remedy this, the requirements of allocators were made more restrictive. As a result, the level of customization provided by allocators is more limited than was originally envisioned by Stepanov.

Nevertheless, there are many scenarios where customized allocators are desirable. Some of the most common reasons for writing custom allocators include improving performance of allocations by using memory pools, and encapsulating access to different types of memory, like shared memory or garbage-collected memory. In particular, programs with many frequent allocations of small amounts of memory may benefit greatly from specialized allocators, both in terms of running time and memory footprint. (Full article...) - Image 3

Ada is a structured, statically typed, imperative, and object-orientedhigh-level programming language, inspired by Pascal and other languages. It has built-in language support for design by contract (DbC), extremely strong typing, explicit concurrency, tasks, synchronous message passing, protected objects, and non-determinism. Ada improves code safety and maintainability by using the compiler to find errors in favor of runtime errors. Ada is an internationaltechnical standard, jointly defined by the International Organization for Standardization (ISO), and the International Electrotechnical Commission (IEC). As of May 2023[update], the standard, ISO/IEC 8652:2023, is called Ada 2022 informally.

Ada was originally designed by a team led by French computer scientistJean Ichbiah of Honeywell under contract to the United States Department of Defense (DoD) from 1977 to 1983 to supersede over 450 programming languages then used by the DoD. Ada was named after Ada Lovelace (1815–1852), who has been credited as the first computer programmer. (Full article...) - Image 4

The Greek lowercase omega (ω) character is used to represent Null in database theory.

In SQL, null or NULL is a special marker used to indicate that a data value does not exist in the database. Introduced by the creator of the relational database model, E. F. Codd, SQL null serves to fulfill the requirement that all true relational database management systems (RDBMS) support a representation of "missing information and inapplicable information". Codd also introduced the use of the lowercase Greek omega (ω) symbol to represent null in database theory. In SQL,NULLis a reserved word used to identify this marker.

A null should not be confused with a value of 0. A null indicates a lack of a value, which is not the same as a zero value. For example, consider the question "How many books does Adam own?" The answer may be "zero" (we know that he owns none) or "null" (we do not know how many he owns). In a database table, the column reporting this answer would start with no value (marked by null), and it would not be updated with the value zero until it is ascertained that Adam owns no books.

In SQL, null is a marker, not a value. This usage is quite different from most programming languages, where a null value of a reference means it is not pointing to any object. (Full article...) - Image 5

Scala (/ˈskɑːlɑː/SKAH-lah) is a strongstatically typedhigh-levelgeneral-purpose programming language that supports both object-oriented programming and functional programming. Designed to be concise, many of Scala's design decisions are intended to address criticisms of Java.

Scala source code can be compiled to Java bytecode and run on a Java virtual machine (JVM). Scala can also be transpiled to JavaScript to run in a browser, or compiled directly to a native executable. When running on the JVM, Scala provides language interoperability with Java so that libraries written in either language may be referenced directly in Scala or Java code. Like Java, Scala is object-oriented, and uses a syntax termed curly-brace which is similar to the language C. Since Scala 3, there is also an option to use the off-side rule (indenting) to structure blocks, and its use is advised. Martin Odersky has said that this turned out to be the most productive change introduced in Scala 3.

Unlike Java, Scala has many features of functional programming languages (like Scheme, Standard ML, and Haskell), including currying, immutability, lazy evaluation, and pattern matching. It also has an advanced type system supporting algebraic data types, covariance and contravariance, higher-order types (but not higher-rank types), anonymous types, operator overloading, optional parameters, named parameters, raw strings, and an experimental exception-only version of algebraic effects that can be seen as a more powerful version of Java's checked exceptions. (Full article...) - Image 6

PDP-11 CPU board

Computer hardware includes the physical parts of a computer, such as the central processing unit (CPU), random access memory (RAM), motherboard, computer data storage, graphics card, sound card, and computer case. It includes external devices such as a monitor, mouse, keyboard, and speakers.

By contrast, software is a set of written instructions that can be stored and run by hardware. Hardware derived its name from the fact it is hard or rigid with respect to changes, whereas software is soft because it is easy to change.

Hardware is typically directed by the software to execute any command or instruction. A combination of hardware and software forms a usable computing system, although other systems exist with only hardware. (Full article...) - Image 7

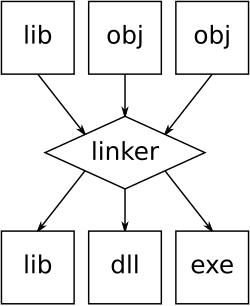

An illustration of the linking process. Object files and static libraries are assembled into a new library or executable

A linker or link editor is a computer program that combines intermediate software build files such as object and library files into a single executable file such as a program or library. A linker is often part of a toolchain that includes a compiler and/or assembler that generates intermediate files that the linker processes. The linker may be integrated with other toolchain tools such that the user does not interact with the linker directly.

A simpler version that writes its output directly to memory is called the loader, though loading is typically considered a separate process. (Full article...) - Image 8

Go is a high-levelgeneral purpose programming language that is statically typed and compiled. It is known for the simplicity of its syntax and the efficiency of development that it enables by the inclusion of a large standard library supplying many needs for common projects. It was designed at Google in 2007 by Robert Griesemer, Rob Pike, and Ken Thompson, and publicly announced in November of 2009. It is syntactically similar to C, but also has memory safety, garbage collection, structural typing, and CSP-style concurrency. It is often referred to as Golang to avoid ambiguity and because of its former domain name,golang.org, but its proper name is Go.

There are two major implementations:- The original, self-hostingcompilertoolchain, initially developed inside Google;

- A frontend written in C++, called gofrontend, originally a GCC frontend, providing gccgo, a GCC-based Go compiler; later extended to also support LLVM, providing an LLVM-based Go compiler called gollvm.

- Image 9Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process. (Full article...) - Image 10

Large supercomputers such as IBM's Blue Gene/P are designed to heavily exploit parallelism.

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption (and consequently heat generation) by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

In computer science, parallelism and concurrency are two different things: a parallel program uses multiple CPU cores, each core performing a task independently. On the other hand, concurrency enables a program to deal with multiple tasks even on a single CPU core; the core switches between tasks (i.e. threads) without necessarily completing each one. A program can have both, neither or a combination of parallelism and concurrency characteristics.

Parallel computers can be roughly classified according to the level at which the hardware supports parallelism, with multi-core and multi-processor computers having multiple processing elements within a single machine, while clusters, MPPs, and grids use multiple computers to work on the same task. Specialized parallel computer architectures are sometimes used alongside traditional processors, for accelerating specific tasks. (Full article...) - Image 11

Eiffel is an object-orientedprogramming language designed by Bertrand Meyer (an object-orientation proponent and author of Object-Oriented Software Construction) and Eiffel Software. Meyer conceived the language in 1985 with the goal of increasing the reliability of commercial software development. The first version was released in 1986. In 2005, the International Organization for Standardization (ISO) released a technical standard for Eiffel.

The design of the language is closely connected with the Eiffel programming method. Both are based on a set of principles, including design by contract, command–query separation, the uniform-access principle, the single-choice principle, the open–closed principle, and option–operand separation.

Many concepts initially introduced by Eiffel were later added into Java, C#, and other languages. New language design ideas, particularly through the Ecma/ISO standardization process, continue to be incorporated into the Eiffel language. (Full article...) - Image 12

Haskell (/ˈhæskəl/) is a general-purpose, statically typed, purely functionalprogramming language with type inference and lazy evaluation. Designed for teaching, research, and industrial applications, Haskell pioneered several programming language features such as type classes, which enable type-safeoperator overloading, and monadicinput/output (IO). It is named after logicianHaskell Curry. Haskell's main implementation is the Glasgow Haskell Compiler (GHC).

Haskell's semantics are historically based on those of the Miranda programming language, which served to focus the efforts of the initial Haskell working group. The last formal specification of the language was made in July 2010, while the development of GHC continues to expand Haskell via language extensions.

Haskell is used in academia and industry. As of May 2021[update], Haskell was the 28th most popular programming language by Google searches for tutorials, and made up less than 1% of active users on the GitHub source code repository. (Full article...) - Image 13SNOBOL ("StriNg Oriented and symBOlic Language") is a series of programming languages developed between 1962 and 1967 at AT&TBell Laboratories by David J. Farber, Ralph Griswold and Ivan P. Polonsky, culminating in SNOBOL4. It was one of a number of text-string-oriented languages developed during the 1950s and 1960s; others included COMIT and TRAC. Despite the similar name, it is entirely unlike COBOL.

SNOBOL4 stands apart from most programming languages of its era by having patterns as a first-class data type, a data type whose values can be manipulated in all ways permitted to any other data type in the programming language, and by providing operators for pattern concatenation and alternation. SNOBOL4 patterns are a type of object and admit various manipulations, much like later object-oriented languages such as JavaScript whose patterns are known as regular expressions. In addition SNOBOL4 strings generated during execution can be treated as programs and either interpreted or compiled and executed (as in the eval function of other languages).

SNOBOL4 was quite widely taught in larger U.S. universities in the late 1960s and early 1970s and was widely used in the 1970s and 1980s as a text manipulation language in the humanities. (Full article...) - Image 14

W3sDesign Interpreter Design Pattern UML

In computer science, an interpreter is a computer program that directly executes instructions written in a programming or scripting language, without requiring them previously to have been compiled into a machine language program. An interpreter generally uses one of the following strategies for program execution:- Parse the source code and perform its behavior directly;

- Translate source code into some efficient intermediate representation or object code and immediately execute that;

- Explicitly execute stored precompiled bytecode made by a compiler and matched with the interpreter's virtual machine.

Early versions of Lisp programming language and minicomputer and microcomputer BASIC dialects would be examples of the first type. Perl, Raku, Python, MATLAB, and Ruby are examples of the second, while UCSD Pascal is an example of the third type. Source programs are compiled ahead of time and stored as machine independent code, which is then linked at run-time and executed by an interpreter and/or compiler (for JIT systems). Some systems, such as Smalltalk and contemporary versions of BASIC and Java, may also combine two and three types. Interpreters of various types have also been constructed for many languages traditionally associated with compilation, such as Algol, Fortran, Cobol, C and C++. (Full article...) - Image 15

Augusta Ada King, Countess of Lovelace (néeByron; 10 December 1815 – 27 November 1852), also known as Ada Lovelace, was an English mathematician and writer chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognise that the machine had applications beyond pure calculation.

Lovelace was the only legitimate child of poet Lord Byron and reformer Anne Isabella Milbanke. All her half-siblings, Lord Byron's other children, were born out of wedlock to other women. Lord Byron separated from his wife a month after Ada was born and left England forever. He died in Greece when she was eight. Lady Byron was anxious about her daughter's upbringing and promoted Lovelace's interest in mathematics and logic in an effort to prevent her from developing her father's perceived insanity. Despite this, Lovelace remained interested in her father, naming her two sons Byron and Gordon. Upon her death, she was buried next to her father at her request. Although often ill in her childhood, Lovelace pursued her studies assiduously. She married William King in 1835. King was made Earl of Lovelace in 1838, Ada thereby becoming Countess of Lovelace.

Lovelace's educational and social exploits brought her into contact with scientists such as Andrew Crosse, Charles Babbage, Sir David Brewster, Charles Wheatstone and Michael Faraday, and the author Charles Dickens, contacts which she used to further her education. Lovelace described her approach as "poetical science" and herself as an "Analyst (& Metaphysician)". (Full article...)

Selected images

- Image 1A view of the GNU nano Text editor version 6.0

- Image 2Output from a (linearised) shallow water equation model of water in a bathtub. The water experiences 5 splashes which generate surface gravity waves that propagate away from the splash locations and reflect off of the bathtub walls.

- Image 3Deep Blue was a chess-playingexpert system run on a unique purpose-built IBMsupercomputer. It was the first computer to win a game, and the first to win a match, against a reigning world champion under regular time controls. Photo taken at the Computer History Museum.

- Image 4Margaret Hamilton standing next to the navigation software that she and her MIT team produced for the Apollo Project.

- Image 5Partial map of the Internet based on the January 15, 2005 data found on opte.org. Each line is drawn between two nodes, representing two IP addresses. The length of the lines are indicative of the delay between those two nodes. This graph represents less than 30% of the Class C networks reachable by the data collection program in early 2005.

- Image 7A head crash on a modern hard disk drive

- Image 8An IBM Port-A-Punch punched card

- Image 10A lone house. An image made using Blender 3D.

- Image 11Grace Hopper at the UNIVAC keyboard, c. 1960. Grace Brewster Murray: American mathematician and rear admiral in the U.S. Navy who was a pioneer in developing computer technology, helping to devise UNIVAC I. the first commercial electronic computer, and naval applications for COBOL (common-business-oriented language).

- Image 12This image (when viewed in full size, 1000 pixels wide) contains 1 million pixels, each of a different color.

- Image 13GNOME Shell, GNOME Clocks, Evince, gThumb and GNOME Files at version 3.30, in a dark theme

- Image 15Ada Lovelace was an English mathematician and writer, chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognize that the machine had applications beyond pure calculation, and to have published the first algorithm intended to be carried out by such a machine. As a result, she is often regarded as the first computer programmer.

- Image 17Stephen Wolfram is a British-American computer scientist, physicist, and businessman. He is known for his work in computer science, mathematics, and in theoretical physics.

- Image 18Partial view of the Mandelbrot set. Step 1 of a zoom sequence: Gap between the "head" and the "body" also called the "seahorse valley".

Did you know? - load more entries

- ... that both Thackeray and Longfellow bought paintings by Fanny Steers?

- ... that the Gale–Shapley algorithm was used to assign medical students to residencies long before its publication by Gale and Shapley?

- ... that a pink skin for Mercy in the video game Overwatch helped raise more than $12 million for breast cancer research?

- ... that the programming language Acorn System BASIC was so non-standard that one commenter suggested that using it on the BBC Micro would be a disaster?

- ... that Phil Fletcher as Hacker T. Dog caused Lauren Layfield to make the "most famous snort" in the United Kingdom in 2016?

- ... that NATO was once targeted by a group of "gay furry hackers"?

Subcategories

WikiProjects

- There are many users interested in computer programming, join them.

Computer programming news

No recent news

Topics

Related portals

Associated Wikimedia

The following Wikimedia Foundation sister projects provide more on this subject:

- Commons

Free media repository - Wikibooks

Free textbooks and manuals - Wikidata

Free knowledge base - Wikinews

Free-content news - Wikiquote

Collection of quotations - Wikisource

Free-content library - Wikiversity

Free learning tools - Wiktionary

Dictionary and thesaurus

.png/250px-Ada_Byron_daguerreotype_by_Antoine_Claudet_1843_or_1850_-_cropped_(cropped).png)

_in_Dark_theme.png/120px-GNOME_Shell,_GNOME_Clocks,_Evince,_gThumb,_GNOME_Files_at_version_3.30_(2018-09)_in_Dark_theme.png)