Presentation database on flash

![Matching IO Intensity to Storage Tier](https://image.slidesharecdn.com/presentation-databaseonflash-150728034917-lva1-app6891/85/Presentation-database-on-flash-22-320.jpg)

Matching IO Intensity to Storage Tier

- Object Intensity = `Object-IOPS / Object-GB`
- Match Object Intensity with Storage Tier
- `COST[tier] = MAX(Object-IOPS * $/IOPS[tier], Object-GB * $/GB[tier])`
- `Tier-Intensity[tier] = $/GB[tier] / $/IOPS[tier]`
- If Object-Intensity > Tier-Intensity, the cost of the object in this tier is IOPS-bound.
- Otherwise, the cost of the object in this tier is capacity-bound.
- Optimize:
  - If the cost is IOPS-bound, compare with the adjacent tier that has cheaper IOPS (toward flash).
  - If the cost is capacity-bound, compare with the adjacent tier that has cheaper capacity (toward high-capacity disk).
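Here is a minimal Python sketch of this cost model. It illustrates the idea and is not code from the presentation. The `Tier` class and the `object_cost`, `is_iops_bound`, and `cheapest_tier` helpers are hypothetical names. The per-GB and per-IOPS prices are derived from the example devices on the Tier Intensity Cut Offs slide.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """A storage tier priced per GB and per IOPS (hypothetical model)."""
    name: str
    usd_per_gb: float
    usd_per_iops: float

    @property
    def intensity(self) -> float:
        # Tier intensity = $/GB / $/IOPS, expressed in IOPS per GB.
        return self.usd_per_gb / self.usd_per_iops


def object_cost(tier: Tier, iops: float, gb: float) -> float:
    # The object pays for whichever resource it needs more of on this tier.
    return max(iops * tier.usd_per_iops, gb * tier.usd_per_gb)


def is_iops_bound(tier: Tier, iops: float, gb: float) -> bool:
    # Object intensity above tier intensity means the IOPS term dominates.
    return iops / gb > tier.intensity


def cheapest_tier(tiers: list[Tier], iops: float, gb: float) -> Tier:
    # Pick the tier with the lowest cost for this object.
    return min(tiers, key=lambda t: object_cost(t, iops, gb))


# Prices derived from the slide's example devices: price / GB and price / IOPS.
TIERS = [
    Tier("hi-cap disk", 250 / 1000, 250 / 100),
    Tier("fast disk", 600 / 300, 600 / 300),
    Tier("flash", 2400 / 80, 2400 / 30_000),
]

if __name__ == "__main__":
    # A 100 GB object doing 5,000 IOPS (object intensity 50 IOPS/GB).
    for t in TIERS:
        print(t.name, object_cost(t, 5_000, 100), is_iops_bound(t, 5_000, 100))
    print("cheapest:", cheapest_tier(TIERS, 5_000, 100).name)
```

The `MAX` of the two terms reflects the slide's assumption: on a given tier, an object pays for whichever resource (capacity or IOPS) it exhausts first. Real pricing is rarely this linear.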

![Tier Intensity Cut Offs](https://image.slidesharecdn.com/presentation-databaseonflash-150728034917-lva1-app6891/85/Presentation-database-on-flash-23-320.jpg)

Tier Intensity Cut Offs

- Tier Intensity = `$/GB / $/IOPS`
  - Hi Cap Disk Intensity = `($250 / 1000 GB) / ($250 / 100 IOPS) = 1/10`
  - Fast Disk Intensity = `($600 / 300 GB) / ($600 / 300 IOPS) = 1`
  - Flash Intensity = `($2400 / 80 GB) / ($2400 / 30K IOPS) = 375`
- If Object Intensity is > 375: choose Flash.
- If Object Intensity is between 1 and 375: break even when Intensity is `$/GB[Flash] / $/IOPS[Fast-Disk] = ($2400 / 80 GB) / ($600 / 300 IOPS) = 15`. Choose Flash above 15 and Fast Disk below it.
- If Object Intensity is between 1/10 and 1: break even when Intensity is `$/GB[Fast-Disk] / $/IOPS[HC-Disk] = ($600 / 300 GB) / ($250 / 100 IOPS) = 0.8`. Choose Fast Disk above 0.8 and High Capacity Disk below it.
- If Object Intensity is < 1/10: choose High Capacity Disk.
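The cut-offs can be recomputed from the example device prices. The Python sketch below is illustrative only. It assumes each tier is priced as a single device with the slide's price, capacity, and IOPS, and all helper names are hypothetical. The final loop cross-checks the threshold rule against a brute-force search for the cheapest tier per GB.

```python
import math

# Example devices from the slide: (price in USD, capacity in GB, IOPS).
DEVICES = {
    "hi-cap disk": (250, 1000, 100),
    "fast disk": (600, 300, 300),
    "flash": (2400, 80, 30_000),
}


def usd_per_gb(name: str) -> float:
    price, gb, _ = DEVICES[name]
    return price / gb


def usd_per_iops(name: str) -> float:
    price, _, iops = DEVICES[name]
    return price / iops


def tier_intensity(name: str) -> float:
    # $/GB divided by $/IOPS; the device price cancels, leaving IOPS per GB.
    return usd_per_gb(name) / usd_per_iops(name)


def break_even(capacity_bound: str, iops_bound: str) -> float:
    # Intensity at which GB * $/GB on the capacity-bound tier equals
    # IOPS * $/IOPS on the IOPS-bound tier.
    return usd_per_gb(capacity_bound) / usd_per_iops(iops_bound)


FLASH_CUTOFF = break_even("flash", "fast disk")       # 15 IOPS/GB
DISK_CUTOFF = break_even("fast disk", "hi-cap disk")  # 0.8 IOPS/GB


def choose_tier(intensity: float) -> str:
    """Pick a tier from object intensity (IOPS per GB) using the break-evens."""
    if intensity >= FLASH_CUTOFF:
        return "flash"
    if intensity >= DISK_CUTOFF:
        return "fast disk"
    return "hi-cap disk"


def cheapest_by_cost(intensity: float) -> str:
    # Brute force: cost per GB of object is max(I * $/IOPS, $/GB) on each tier.
    return min(DEVICES, key=lambda n: max(intensity * usd_per_iops(n), usd_per_gb(n)))


if __name__ == "__main__":
    for name in DEVICES:
        print(f"{name}: intensity {tier_intensity(name):g} IOPS/GB")
    print(f"flash vs fast disk break-even: {FLASH_CUTOFF:g}")
    print(f"fast disk vs hi-cap disk break-even: {DISK_CUTOFF:g}")
    for i in (0.05, 0.5, 0.9, 5, 50, 500):
        assert choose_tier(i) == cheapest_by_cost(i)
```

The check makes the decision points explicit. High Capacity Disk is cheapest below 0.8 IOPS/GB, Fast Disk between 0.8 and 15, and Flash above 15. The tier intensities themselves (1/10, 1, 375) only mark where each tier switches from capacity-bound to IOPS-bound.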

Recommended

Colvin exadata mistakes_ioug_2014

By marvin herrera. This document discusses common mistakes made when implementing Oracle Exadata systems. It describes improperly sized SGAs, which can hurt performance on data warehouses. It also discusses issues such as not using huge pages, overuse or underuse of indexing, too much parallelization, selecting the wrong disk types, failing to patch systems, and not implementing tools like Automatic Service Request and exachk. The document provides guidance on optimizing these areas to get the best performance from Exadata.

Why does my choice of storage matter with cassandra?

By Johnny Miller. The document discusses how the choice of storage is critical for Cassandra deployments. It summarizes that SSDs are generally the best choice as they have no moving parts, resulting in much faster performance compared to HDDs. Specifically, SSDs can eliminate issues caused by disk seeks and allow the use of compaction strategies like leveled compaction that require lower seek times. The document provides measurements showing SSDs are up to 100x faster than HDDs for read/write speeds and latency. It recommends choosing local SSD storage in a JBOD configuration when possible for best performance and manageability.

CaSSanDra: An SSD Boosted Key-Value Store

By Tilmann Rabl. This presentation was given by Prashanth Menon at ICDE '14 on April 3, 2014, in Chicago, IL, USA. The full paper and additional information are available at: http://msrg.org/papers/Menon2013 Abstract: With the ever growing size and complexity of enterprise systems there is a pressing need for more detailed application performance management. Due to the high data rates, traditional database technology cannot sustain the required performance. Alternatives are the more lightweight and, thus, more performant key-value stores. However, these systems tend to sacrifice read performance in order to obtain the desired write throughput by avoiding random disk access in favor of fast sequential accesses. With the advent of SSDs, built upon the philosophy of no moving parts, the boundary between sequential vs. random access is now becoming blurred. This provides a unique opportunity to extend the storage memory hierarchy using SSDs in key-value stores. In this paper, we extensively evaluate the benefits of using SSDs in commercialized key-value stores. In particular, we investigate the performance of hybrid SSD-HDD systems and demonstrate the benefits of our SSD caching and our novel dynamic schema model.

Cassandra Day SV 2014: Designing Commodity Storage in Apache Cassandra

By DataStax Academy. As we move into the world of Big Data and the Internet of Things, the systems architectures and data models we've relied on for decades are becoming a hindrance. At the core of the problem is the read-modify-write cycle. In this session, Al will talk about how to build systems that don't rely on RMW, with a focus on Cassandra. Finally, for those times when RMW is unavoidable, he will cover how and when to use Cassandra's lightweight transactions and collections.

Deploying ssd in the data center 2014

By Howard Marks. This document discusses various options for deploying solid state drives (SSDs) in the data center to address storage performance issues. It describes all-flash arrays that use only SSDs, hybrid arrays that combine SSDs and hard disk drives, and server-side flash caching. Key points covered include the performance benefits of SSDs over HDDs, different types of SSDs, form factors, deployment architectures like all-flash arrays from vendors, hybrid arrays, server-side caching software, virtual storage appliances, and hyperconverged infrastructure systems. Choosing the best solution depends on factors like performance needs, capacity, data services required, and budget.

V mware virtual san 5.5 deep dive

By solarisyougood. This presentation is a technical deep dive into VMware Virtual SAN 5.5, VMware's software-defined storage technology. Key points include:

- Virtual SAN runs on standard x86 servers and provides a policy-based management framework and a high-performance flash architecture.
- It scales to up to 32 hosts, 3,200 VMs, 4.4 petabytes, and 2 million IOPS.
- It integrates with VMware technologies such as vMotion, vSphere HA, and vSphere Replication, and simplifies storage management.
- It offers flexible configurations and granular scaling, and reduces both capital and operating expenses for improved total cost of ownership.

NGENSTOR_ODA_HPDA

By UniFabric. This document introduces the HPDA 100, a high performance database appliance built by the NGENSTOR Alliance. It has two server platforms using either a proprietary 4-core 6.3GHz CPU or Intel Xeon E5 CPUs. Networking uses 40GbE and storage interfaces provide up to 22.4TB of raw PCIe SSD storage or integration with external storage arrays. Specs list configurations with 16-72 CPU cores, 256GB-6TB memory, and 22.4TB of raw internal SSD storage. The document provides an overview of the hardware under the hood and specifications of the HPDA 100 high performance database appliance.

SOUG_Deployment__Automation_DB

By UniFabric. This document discusses database deployment automation. It begins with introductions and an example of a problematic Friday deployment. It then reviews the concept of automation and different visions of it within an organization. Potential tools and frameworks for automation are discussed, along with common pitfalls. Basic deployment workflows using Oracle Cloud Control are demonstrated, including setting credentials, creating a proxy user, adding target properties, and using a job template. The document concludes by emphasizing that database deployment automation is possible but requires effort from multiple teams.

Hybrid Storage Pools (Now with the benefit of hindsight!)

By ahl0003. This document discusses the history and current state of hybrid storage pools that combine disk and flash storage using ZFS. It notes that while flash was initially seen as a replacement for disk, disk still plays an important role. ZFS introduced the hybrid storage pool concept in 2007 to take advantage of flash's speed between DRAM and disk. The L2ARC feature caches data from disk on flash to improve performance but has issues with persistence and warmup times that need further work. Overall, hybrid storage pools that integrate disk and flash can provide benefits but developing the L2ARC and optimizing for real workloads remains an area for improvement.

FlashSQL Introduction & TechTalk

By I Goo Lee. MySQL PowerGroup Tech Seminar (January 2016), session 4: FlashSQL Introduction & TechTalk (by 이상원). URL: cafe.naver.com/mysqlpg

Tuning Linux Windows and Firebird for Heavy Workload

By Marius Adrian Popa. The document summarizes a presentation on optimizing Linux, Windows, and Firebird for heavy workloads. It describes two customer implementations using Firebird - a medical company with 17 departments and over 700 daily users, and a repair services company with over 500 daily users. It discusses tuning the operating system, hardware, CPU, RAM, I/O, network, and Firebird configuration to improve performance under heavy loads. Specific recommendations are provided for Linux and Windows configuration.

Sql saturday powerpoint dc_san

By Joseph D'Antoni. The document discusses storage area networks (SANs) for database administrators. It covers SAN components like fiber optic cards, switches, and disk arrays. RAID levels like 0, 1, 5, and 10 are explained along with their benefits and drawbacks. Solid state drives offer faster performance but at a higher cost. The document recommends placing tempdb and log files on separate high-performance disks. SANs provide benefits like easy capacity expansion, high availability, and disaster recovery through replication. DBAs should work with their SAN administrators to test performance and isolate databases and their files.

SSD Deployment Strategies for MySQL

By Yoshinori Matsunobu. Slides for MySQL Conference & Expo 2010: http://en.oreilly.com/mysql2010/public/schedule/detail/13519

Eric Moreau - Samedi SQL - Backups to Azure and Hybrid Databases

By MSDEVMTL. Samedi SQL, February 7, 2015. Topic: Session 4 - Backups to Azure, Hybrid Databases (Éric Moreau). This session shows how to back up your on-premises databases to Azure. It also shows how to use hybrid databases.

Cassandra Day Chicago 2015: DataStax Enterprise & Apache Cassandra Hardware B...

By DataStax Academy. Speaker(s): Kathryn Erickson, Engineering at DataStax. During this session we will discuss varying recommended hardware configurations for DSE. We’ll get right to the point and provide quick and solid recommendations up front. After we get the main points down, we'll take a brief tour of the history of database storage and then focus on designing a storage subsystem that won't let you down.

Ceph Day San Jose - Red Hat Storage Acceleration Utilizing Flash Technology

By Ceph Community. The document discusses three ways to accelerate application performance with flash storage using Ceph software defined storage: 1) utilizing all flash storage to maximize performance, 2) using a hybrid configuration with flash and HDDs to balance performance and capacity, and 3) using all HDD storage for maximum capacity but lowest performance. It also examines using NVMe SSDs versus SATA SSDs, and how to optimize Linux settings and Ceph configuration to improve flash performance for applications.

Demystifying Storage - Building large SANs

By Directi Group. From http://wiki.directi.com/x/hQAa - This is a fairly detailed presentation I made at BarCamp Mumbai on building large storage networks and different SAN topologies. It covers the fundamentals of selecting hard drives, RAID levels, and the performance of various storage architectures. This is Part I of a 3-part series.

Journey to Stability: Petabyte Ceph Cluster in OpenStack Cloud

By Patrick McGarry. Cisco Cloud Services provides an OpenStack platform to Cisco SaaS applications using a worldwide deployment of Ceph clusters storing petabytes of data. The initial Ceph cluster design experienced major stability problems as the cluster grew past 50% capacity. Strategies were implemented to improve stability including client IO throttling, backfill and recovery throttling, upgrading Ceph versions, adding NVMe journals, moving the MON levelDB to SSDs, rebalancing the cluster, and proactively detecting slow disks. Lessons learned included the importance of devops practices, sharing knowledge, rigorous testing, and balancing performance, cost and time.

How to deploy SQL Server on Microsoft Azure virtual machines

By SolarWinds. Running apps on Microsoft Azure Virtual Machines is tempting, promising faster deployments and lower overall TCO. But how easy is it really to configure and run SQL Server in an Azure VM environment? Learn what you should know about tuning, optimizing, and key indicators for monitoring performance, as well as special considerations for high availability and disaster recovery.

Cassandra and Solid State Drives

Cassandra and Solid State DrivesRick Branson This document discusses how Cassandra's storage engine was optimized for spinning disks but remains well-suited for solid state drives. It describes how Cassandra uses LSM trees with sequential, append-only writes to disks, avoiding the random read/write patterns that cause issues for SSDs like write amplification and reduced lifetime from excessive garbage collection. While SSDs have benefits like fast random access, Cassandra's design circumvents problems they were meant to solve, keeping write amplification close to 1 and leveraging SSDs' fast sequential throughput.

Global Azure Virtual 2020 What's new on Azure IaaS for SQL VMs

By Marco Obinu. How to size a VM for SQL Server on Azure IaaS in light of the platform's latest developments. Session delivered on April 24, 2020, as part of Global Azure Virtual 2020. Session video: https://youtu.be/7o80CJUtnh4 Demo: https://github.com/OmegaMadLab/SqlIaasVmPlayground ARM template optimized for SQL Server: https://github.com/OmegaMadLab/OptimizedSqlVm-v2

Using Windows Storage Spaces and iSCSI on Amazon EBS

By Laroy Shtotland. This presentation discusses using Windows Storage Spaces to pool multiple Amazon EBS volumes into large virtual drives. It describes potential use cases like backing up files to Storage Spaces volumes in AWS. However, Storage Spaces pools lack high availability since each EBS volume can only attach to one server. The presentation also covers security and performance considerations for using Storage Spaces with EBS.

SOUG_SDM_OracleDB_V3

By UniFabric. Software Defined Memory (SDM) uses new technologies like non-volatile RAM and flash storage to treat memory and storage as a unified persistent resource without traditional performance tiers. This can optimize Oracle database I/O performance by bypassing buffer caches and using fast kernel threads. Benchmarks showed a Plexistor SDM solution outperforming a traditional two-node Oracle RAC cluster. The best approach is to use fast storage like 3D XPoint as the secondary tier to maintain high performance even with cache misses. Combining SDM with solutions like FlashGrid and Oracle RAC could provide extremely high performance.

Linux and H/W optimizations for MySQL

By Yoshinori Matsunobu. This document summarizes optimizations for MySQL performance on Linux hardware. It covers SSD and memory performance impacts, file I/O, networking, and useful tools. The history of MySQL performance improvements is discussed from hardware upgrades like SSDs and more CPU cores to software optimizations like improved algorithms and concurrency. Optimizing per-server performance to reduce total servers needed is emphasized.

Design Tradeoffs for SSD Performance

By jimmytruong. Design Tradeoffs for SSD Performance discusses the key differences between rotating disks and solid state drives (SSDs) and important tradeoffs in SSD design. SSDs have no moving parts but operate differently than disks due to the nature of flash memory. SSD performance is impacted by write amplification from log-structured writing and wear from flash block erasure. Maximizing parallelism through techniques like striping and interleaving is important to improve SSD throughput. Wear-leveling is also critical to ensure even wear across flash blocks and avoid premature device failure.

2015 deploying flash in the data center

By Howard Marks. Deploying Flash in the Data Center discusses various ways to deploy flash storage in the data center to improve performance. It describes all-flash arrays, which provide the highest performance but are also more expensive, as well as hybrid arrays that combine flash and disk. It also covers using flash in servers or as a cache to accelerate storage arrays. Choosing the best approach depends on factors like workload, budget, and existing infrastructure.

2015 deploying flash in the data center

By Howard Marks. This document discusses deploying flash storage in the data center to improve storage performance. It begins with an overview of the performance gap between processors and disks. It then discusses all-flash arrays, hybrid arrays, server-side flash caching, and converged architectures as solutions. It provides details on flash memory types, form factors, and considerations for choosing a flash solution.

IaaS for DBAs in Azure

By Kellyn Pot'Vin-Gorman. This document provides guidance and best practices for migrating database workloads to infrastructure as a service (IaaS) in Microsoft Azure. It discusses choosing the appropriate virtual machine series and storage options to meet performance needs. The document emphasizes migrating the workload, not the hardware, and using cloud services to simplify management like automated patching and backup snapshots. It also recommends bringing existing monitoring and management tools to the cloud when possible rather than replacing them. The key takeaways are to understand the workload demands, choose optimal IaaS configurations, leverage cloud-enabled tools, and involve database experts when issues arise to address the root cause rather than just adding resources.

505 kobal exadata

By Kam Chan. The Exadata X3 introduces new hardware with dramatically more and faster flash memory, more DRAM memory, faster CPUs, and more connectivity while maintaining the same price as the previous Exadata X2 platform. Key software enhancements include Exadata Smart Flash Write Caching which provides up to 20 times more write I/O performance, and Hybrid Columnar Compression which now supports write-back caching and provides storage savings of up to 15 times. The Exadata X3 provides higher performance, more storage capacity, and lower power usage compared to previous Exadata platforms.

Oracle Exadata Version 2

By Jarod Wang. The document describes Oracle Exadata Version 2, which is positioned as the world's fastest machine for online transaction processing (OLTP). Version 2 features faster CPUs, networking, disks, and flash storage compared to Version 1. It provides extreme performance for random I/O workloads through its use of solid-state drive (SSD) flash memory caches. Customers saw performance improvements of 10-72x on their queries with Exadata compared to previous systems.

Ceph Day Tokyo -- Ceph on All-Flash Storage

Ceph Day Tokyo -- Ceph on All-Flash StorageCeph Community SanDisk's presentation from Ceph Day APAC Roadshow in Tokyo. http://ceph.com/cephdays/ceph-day-apac-roadshow-tokyo/

Accelerating HBase with NVMe and Bucket Cache

Accelerating HBase with NVMe and Bucket CacheNicolas Poggi on-Volatile-Memory express (NVMe) standard promises and order of magnitude faster storage than regular SSDs, while at the same time being more economical than regular RAM on TB/$. This talk evaluates the use cases and benefits of NVMe drives for its use in Big Data clusters with HBase and Hadoop HDFS. First, we benchmark the different drives using system level tools (FIO) to get maximum expected values for each different device type and set expectations. Second, we explore the different options and use cases of HBase storage and benchmark the different setups. And finally, we evaluate the speedups obtained by the NVMe technology for the different Big Data use cases from the YCSB benchmark. In summary, while the NVMe drives show up to 8x speedup in best case scenarios, testing the cost-efficiency of new device technologies is not straightforward in Big Data, where we need to overcome system level caching to measure the maximum benefits.

S016828 storage-tiering-nola-v1710b

S016828 storage-tiering-nola-v1710bTony Pearson This document discusses techniques for implementing storage tiering to simplify management, lower costs, and increase performance. It describes using IBM's Easy Tier technology to automatically move data between tiers of flash, disk, and tape storage based on I/O density and age. The tiers include flash, solid state drives, enterprise HDDs, and nearline HDDs. Easy Tier measures activity every 5 minutes and moves hot data to faster tiers and cold data to slower tiers with little administration needed. Case studies show how storage tiering saved IBM Global Accounts $17 million in one year and $90 million over 5 years by optimizing data placement across tiers.

In-memory Data Management Trends & Techniques

In-memory Data Management Trends & TechniquesHazelcast - Hardware trends like increasing cores/CPU and RAM sizes enable in-memory data management techniques. Commodity servers can now support terabytes of memory. - Different levels of data storage have vastly different access times, from registers (<1ns) to disk (4-7ms). Caching data in faster levels of storage improves performance. - Techniques to exploit data locality, cache hierarchies, tiered storage, parallelism and in-situ processing can help overcome hardware limitations and achieve fast, real-time processing. Emerging in-memory databases use these techniques to enable new types of operational analytics.

Sun storage tek 6140 technical presentation

Sun storage tek 6140 technical presentationxKinAnx This technical presentation describes the Sun StorageTek 6140 array, a new storage array that provides faster performance, greater flexibility and scalability, and robust data protection capabilities. It is available in configurations with 2GB or 4GB of cache that can scale up to 64 or 112 drives respectively. The array offers improved features over previous generations such as an end-to-end 4Gb/s infrastructure and faster estimated performance specs. It also includes data services software for replication, snapshots, and cloning along with easy centralized management.

Need for Speed: Using Flash Storage to Optimise Performance and Reduce Costs

Need for Speed: Using Flash Storage to Optimise Performance and Reduce CostsNetApp The document discusses the evolution of disk storage technologies over time from 1956 to 2013, noting massive increases in capacity and performance. It then summarizes the performance characteristics of SAS, SATA, and SSD technologies. The rest of the document discusses different flash storage solutions including all-flash arrays, server flash caching, and hybrid arrays. It provides examples of NetApp's flash portfolio and customer cases that demonstrate the performance and efficiency benefits of its flash technologies.

Need For Speed- Using Flash Storage to optimise performance and reduce costs-...

Need For Speed- Using Flash Storage to optimise performance and reduce costs-...NetAppUK Flash Storage technologies are opening up a wealth of new opportunities for improving the optimisation of applications, data and storage, as well as reducing costs. In this session, Peter Mason, NetApp Consulting Systems Engineer, shares his experiences and discusses the use and impact of different Flash technologies.

Red Hat Storage Day Dallas - Red Hat Ceph Storage Acceleration Utilizing Flas...

Red Hat Storage Day Dallas - Red Hat Ceph Storage Acceleration Utilizing Flas...Red_Hat_Storage Red Hat Ceph Storage can utilize flash technology to accelerate applications in three ways: 1) utilize flash caching to accelerate critical data writes and reads, 2) utilize storage tiering to place performance critical data on flash and less critical data on HDDs, and 3) utilize all-flash storage to accelerate performance when all data is critical or caching/tiering cannot be used. The document then discusses best practices for leveraging NVMe SSDs versus SATA SSDs in Ceph configurations and optimizing Linux settings.

Implementation of Dense Storage Utilizing HDDs with SSDs and PCIe Flash Acc...

Implementation of Dense Storage Utilizing HDDs with SSDs and PCIe Flash Acc...Red_Hat_Storage At Red Hat Storage Day New York on 1/19/16, Red Hat partner Seagate presented on how to implement dense storage using HDDs with SSDs and PCIe flash accelerator cards.

Oracle Performance On Linux X86 systems

Oracle Performance On Linux X86 systems Baruch Osoveskiy On X86 systems, using an Unbreakable Enterprise Kernel (UEK) is recommended over other enterprise distributions as it provides better hardware support, security patches, and testing from the larger Linux community. Key configuration recommendations include enabling maximum CPU performance in BIOS, using memory types validated by Oracle, ensuring proper NUMA and CPU frequency settings, and installing only Oracle-validated packages to avoid issues. Monitoring tools like top, iostat, sar and ksar help identify any CPU, memory, disk or I/O bottlenecks.

Red Hat Ceph Storage Acceleration Utilizing Flash Technology

Red Hat Ceph Storage Acceleration Utilizing Flash Technology Red_Hat_Storage Red Hat Ceph Storage can utilize flash technology to accelerate applications in three ways: 1) use all flash storage for highest performance, 2) use a hybrid configuration with performance critical data on flash tier and colder data on HDD tier, or 3) utilize host caching of critical data on flash. Benchmark results showed that using NVMe SSDs in Ceph provided much higher performance than SATA SSDs, with speed increases of up to 8x for some workloads. However, testing also showed that Ceph may not be well-suited for OLTP MySQL workloads due to small random reads/writes, as local SSD storage outperformed the Ceph cluster. Proper Linux tuning is also needed to maximize SSD performance within

Azure Databases with IaaS

Azure Databases with IaaSKellyn Pot'Vin-Gorman This document discusses best practices for migrating database workloads to Azure Infrastructure as a Service (IaaS). Some key points include: - Choosing the appropriate VM series like E or M series optimized for database workloads. - Using availability zones and geo-redundant storage for high availability and disaster recovery. - Sizing storage correctly based on the database's input/output needs and using premium SSDs where needed. - Migrating existing monitoring and management tools to the cloud to provide familiarity and automating tasks like backups, patching, and problem resolution.

Server side caching Vs other alternatives

Server side caching Vs other alternativesBappaditya Sinha Virtunet Systems provides a software-only performance tier called VirtuCache that caches frequently used data from any SAN-based storage appliance onto SSDs located within the host server. This increases application performance, allows more VMs per host, and postpones the need for SAN upgrades. Compared to upgrading the SAN or storage appliance, VirtuCache provides lower latency by placing SSDs closer to the CPU and is more affordable since it uses retail SSDs rather than proprietary, vendor-locked SSDs.

Ceph Day Taipei - Ceph on All-Flash Storage

Ceph Day Taipei - Ceph on All-Flash Storage Ceph Community The document discusses Ceph storage performance on all-flash storage systems. It notes that Ceph was originally optimized for HDDs and required tuning and algorithm changes to achieve flash-level performance. SanDisk worked with the Ceph community to optimize the object storage daemon (OSD) for flash, improving read and write throughput. Benchmark results show the SanDisk InfiniFlash system delivering over 1 million IOPS and 15GB/s throughput using Ceph software. Reference configurations provide guidance on hardware requirements for small, medium, and large workloads.

Ceph Day KL - Ceph on All-Flash Storage

Ceph Day KL - Ceph on All-Flash Storage Ceph Community The document discusses Ceph storage performance on all-flash storage systems. It describes how SanDisk optimized Ceph for all-flash environments by tuning the OSD to handle the high performance of flash drives. The optimizations allowed over 200,000 IOPS per OSD using 12 CPU cores. Testing on SanDisk's InfiniFlash storage system showed it achieving over 1.5 million random read IOPS and 200,000 random write IOPS at 64KB block size. Latency was also very low, with 99% of operations under 5ms for reads. The document outlines reference configurations for the InfiniFlash system optimized for small, medium and large workloads.

Presentation database on flash

- 1. This is your Database on Flash: Insights from Oracle Development

- 2. Disclaimer THE FOLLOWING IS INTENDED TO OUTLINE OUR GENERAL PRODUCT DIRECTION. IT IS INTENDED FOR INFORMATION PURPOSES ONLY, AND MAY NOT BE INCORPORATED INTO ANY CONTRACT. IT IS NOT A COMMITMENT TO DELIVER ANY MATERIAL, CODE, OR FUNCTIONALITY, AND SHOULD NOT BE RELIED UPON IN MAKING PURCHASING DECISIONS. THE DEVELOPMENT, RELEASE, AND TIMING OF ANY FEATURES OR FUNCTIONALITY DESCRIBED FOR ORACLE'S PRODUCTS REMAINS AT THE SOLE DISCRETION OF ORACLE. Garret Swart, Roopa Agrawal, Sumeet Lahorani, Kiran Goyal

- 3. The Promise of Flash • Replace expensive 15K RPM disks with fewer solid-state devices • Reduce failures & replacement costs • Reduce cost of Storage subsystem • Reduce energy costs • Lower transaction & replication latencies by eliminating seeks and rotational delays • Replace power-hungry, hard-to-scale DRAM with denser, cheaper devices • Reduce cost of memory subsystem • Reduce energy costs • Reduce boot/warm-up time

- 4. SSDs have been around for years: What’s different? • The old SSD market was latency driven • The drives were quite expensive • Consumer applications have driven Flash prices down • The new SSD market is latency and $/IOPS driven

- 5. What will we learn today? • Lots of innovative Flash products • Why they are tricky to evaluate • Which Oracle workloads can benefit from Flash today • How to use IO Intensity to assign objects to storage tiers • Oracle Performance results

- 6. A Cambrian Explosion of Diversity: In Flash Technology • 530,000,000 years ago: • Now:

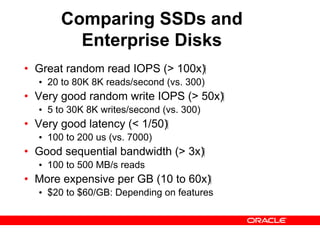

- 7. Comparing SSDs and Enterprise Disks • Great random read IOPS (> 100x) • 20 to 80K 8K reads/second (vs. 300) • Very good random write IOPS (> 50x) • 5 to 30K 8K writes/second (vs. 300) • Very good latency (< 1/50) • 100 to 200 us (vs. 7000) • Good sequential bandwidth (> 3x) • 100 to 500 MB/s reads • More expensive per GB (10 to 60x) • $20 to $60/GB: Depending on features

- 8. Outline • The Flash Promises • Flash Technology • Flash Products • DB Workloads that are good for Flash • Flash Results

- 9. Flash Technology • Two types: NAND and NOR • Both driven by consumer technology • NAND: Optimized for cameras and MP3 players • Density, sequential access • Page read, Page program, Block erase • NOR: Optimized for boot devices • Single-word access & XIP (execute in place) • Word read, Word program, Block erase • Both being adopted for Enterprise use

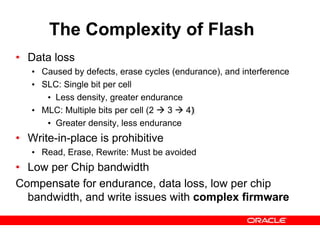

- 10. The Complexity of Flash • Data loss • Caused by defects, erase cycles (endurance), and interference • SLC: Single bit per cell • Lower density, greater endurance • MLC: Multiple bits per cell (2, 3, or 4) • Greater density, lower endurance • Write-in-place is prohibitive • Read, Erase, Rewrite: Must be avoided • Low per-chip bandwidth • Compensate for endurance, data loss, low per-chip bandwidth, and write issues with complex firmware

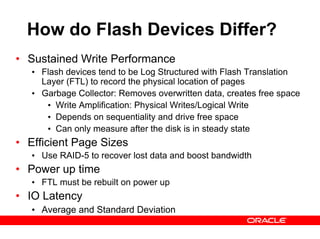

- 11. How do Flash Devices Differ? • Sustained Write Performance • Flash devices tend to be log-structured, with a Flash Translation Layer (FTL) that records the physical location of each page • Garbage Collector: Removes overwritten data, creates free space • Write Amplification: Physical Writes / Logical Writes • Depends on sequentiality and drive free space • Can only be measured after the drive is in steady state • Efficient Page Sizes • Use RAID-5 to recover lost data and boost bandwidth • Power-up time • FTL must be rebuilt on power-up • IO Latency • Average and Standard Deviation
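The link between garbage collection, free space, and write amplification can be illustrated with a toy simulation. This is a minimal sketch, not a model of any real drive's firmware: it assumes uniformly random single-page overwrites, a greedy collector that always reclaims the full block with the fewest valid pages, and made-up geometry. The function name `simulate_write_amplification` and all its parameters are hypothetical.

```python
import random


def simulate_write_amplification(num_blocks, pages_per_block, logical_pages,
                                 host_writes, seed=0):
    """Toy log-structured FTL with greedy garbage collection (hypothetical model).

    Returns physical page programs / host page writes, measured only after the
    drive has been preconditioned by writing every logical page once.
    """
    if logical_pages >= (num_blocks - 2) * pages_per_block:
        raise ValueError("need more than two blocks of spare capacity")
    rng = random.Random(seed)
    location = {}                               # logical page -> physical block
    valid = [set() for _ in range(num_blocks)]  # live logical pages in each block
    free = list(range(num_blocks))              # erased blocks
    full = set()                                # closed blocks: GC candidates
    active, fill, programs = free.pop(), 0, 0

    def program(lpn):
        """Append one page to the active block (out-of-place update)."""
        nonlocal active, fill, programs
        if fill == pages_per_block:             # active block full: open an erased one
            full.add(active)
            active, fill = free.pop(), 0
        old = location.get(lpn)
        if old is not None:
            valid[old].discard(lpn)             # previous copy becomes garbage
        location[lpn] = active
        valid[active].add(lpn)
        fill += 1
        programs += 1

    def collect():
        """Greedy GC: relocate the live pages of the emptiest full block, then erase it."""
        victim = min(full, key=lambda b: len(valid[b]))
        full.remove(victim)
        for lpn in list(valid[victim]):
            program(lpn)                        # each relocation is an extra physical write
        free.append(victim)                     # erase: the block is reusable

    def host_write(lpn):
        while len(free) <= 1:                   # always keep a spare block for relocation
            collect()
        program(lpn)

    for lpn in range(logical_pages):            # precondition: write every page once
        host_write(lpn)
    programs = 0                                # count steady-state writes only
    for _ in range(host_writes):
        host_write(rng.randrange(logical_pages))
    return programs / host_writes


for logical in (1024, 1800):                    # same 64 x 32-page drive, less free space
    wa = simulate_write_amplification(64, 32, logical, 20_000)
    print(f"{logical} of 2048 pages in use: write amplification {wa:.2f}")
```

Filling more of the same physical space with live data leaves the collector fewer nearly-empty blocks to reclaim, so each host write drags along more relocations. The precondition pass mirrors the slide's point that write amplification is only meaningful once the drive reaches steady state.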

- 12. Outline • The Flash Promises • Flash Technology • Flash Products • DB Workloads that are good for Flash • Flash Results

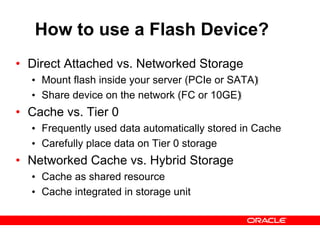

- 13. How to use a Flash Device? • Direct Attached vs. Networked Storage • Mount flash inside your server (PCIe or SATA) • Share device on the network (FC or 10GE) • Cache vs. Tier 0 • Frequently used data automatically stored in Cache • Carefully place data on Tier 0 storage • Networked Cache vs. Hybrid Storage • Cache as shared resource • Cache integrated in storage unit

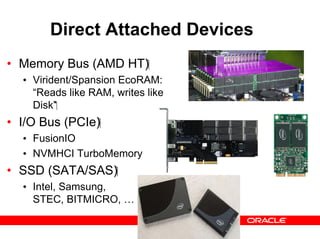

- 14. Direct Attached Devices • Memory Bus (AMD HT) • Virident/Spansion EcoRAM: “Reads like RAM, writes like Disk” • I/O Bus (PCIe) • FusionIO • NVMHCI TurboMemory • SSD (SATA/SAS) • Intel, Samsung, STEC, BITMICRO, …

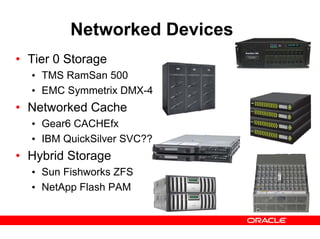

- 15. Networked Devices • Tier 0 Storage • TMS RamSan 500 • EMC Symmetrix DMX-4 • Networked Cache • Gear6 CACHEfx • IBM QuickSilver SVC?? • Hybrid Storage • Sun Fishworks ZFS • NetApp Flash PAM

- 16. Outline • The Flash Promises • Flash Technology • Flash Products • DB Workloads that are good for Flash • Flash Results

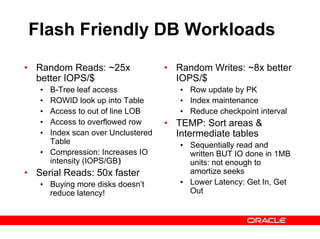

- 17. Flash-Friendly DB Workloads • Random Reads: ~25x better IOPS/$ • B-Tree leaf access • ROWID lookup into Table • Access to out-of-line LOB • Access to overflowed row • Index scan over Unclustered Table • Compression: Increases IO intensity (IOPS/GB) • Serial Reads: 50x faster • Buying more disks doesn’t reduce latency! • Random Writes: ~8x better IOPS/$ • Row update by PK • Index maintenance • Reduce checkpoint interval • TEMP: Sort areas & Intermediate tables • Sequentially read and written BUT IO done in 1MB units: not enough to amortize seeks • Lower Latency: Get In, Get Out
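Why serial (dependent) reads gain so much, and why more disks do not help them, comes down to simple arithmetic. A minimal sketch using the per-read latencies from slide 7 (about 7000 µs per random disk read, 100 to 200 µs per flash read, 300 IOPS per disk); the 4-read chain (say, three index levels plus one table block) is a hypothetical example, not a measured query.

```python
# Latency and IOPS figures from slide 7.
DISK_READ_US = 7000
FLASH_READ_US = (100, 200)
DISK_IOPS = 300


def serial_chain_us(dependent_reads: int, read_latency_us: float) -> float:
    """Each read must finish before the next address is known (e.g. a B-tree
    descent followed by a ROWID lookup), so per-read latencies simply add up."""
    return dependent_reads * read_latency_us


def array_read_iops(disks: int) -> int:
    """Adding disks scales aggregate throughput, not the latency of one read."""
    return disks * DISK_IOPS


if __name__ == "__main__":
    reads = 4  # hypothetical chain: 3 index levels + 1 table block
    disk = serial_chain_us(reads, DISK_READ_US)
    for flash_us in FLASH_READ_US:
        flash = serial_chain_us(reads, flash_us)
        print(f"flash at {flash_us} us: {disk / 1000:.1f} ms -> "
              f"{flash / 1000:.1f} ms ({disk / flash:.0f}x)")
    for disks in (10, 100):
        print(f"{disks} disks: {array_read_iops(disks)} IOPS, "
              f"chain still {disk / 1000:.1f} ms")
```

With the 100 to 200 µs range the chain speeds up 35x to 70x, bracketing the ~50x on the slide, while the disk count changes only the IOPS column.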

- 18. Not as Cost-Effective on Flash • Redo Log Files • Sequentially read and written AND commit latency already handled by NVRAM in controller • Undo Tablespace • Sequentially written, randomly read by Flashback. But reads are for recently written data, which is likely to still be in the buffer cache • Large Table Scans • Buffer pools with lots of writes • Low-latency reads followed by updates can fill up the pool with dirty pages, which take a long time to drain because Flash devices write 2-4x slower than they read • Can cause “Free Buffer Waits” for readers

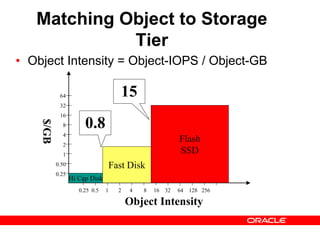

- 19. Matching Object to Storage Tier • Object Intensity = Object-IOPS / Object-GB • [Chart: $/GB vs. Object Intensity (log scales) for Hi Cap Disk, Fast Disk, and Flash SSD, with cut-offs marked at intensities 0.8 and 15]

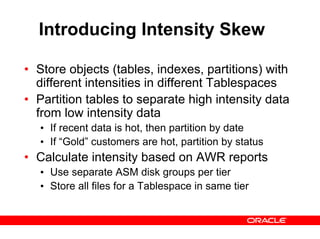

- 20. Introducing Intensity Skew • Store objects (tables, indexes, partitions) with different intensities in different Tablespaces • Partition tables to separate high intensity data from low intensity data • If recent data is hot, then partition by date • If “Gold” customers are hot, partition by status • Calculate intensity based on AWR reports • Use separate ASM disk groups per tier • Store all files for a Tablespace in same tier

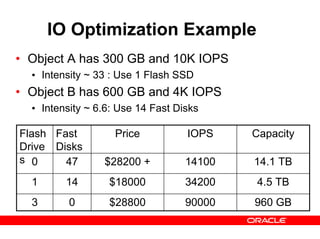
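The benefit of partitioning for intensity skew can be shown with a worked example. This is a minimal sketch with hypothetical numbers (a 1000 GB table doing 6000 IOPS, 90% of which hits the most recent 100 GB partition); the tier cut-offs of 15 and 0.8 IOPS/GB are the break-even values from slide 23. In practice the IOPS and size per segment would come from your own AWR data.

```python
from fractions import Fraction

# Break-even intensities (IOPS per GB) from slide 23.
FLASH_CUTOFF = Fraction(15)
HC_CUTOFF = Fraction(4, 5)  # 0.8


def intensity(iops, gb):
    """Object intensity = Object-IOPS / Object-GB (exact, via Fraction)."""
    return Fraction(iops) / Fraction(gb)


def tier_for(obj_intensity):
    """Pick a tier from the slide 23 cut-offs; ties go to the cheaper-capacity tier."""
    if obj_intensity > FLASH_CUTOFF:
        return "Flash"
    if obj_intensity > HC_CUTOFF:
        return "Fast Disk"
    return "Hi Cap Disk"


# Hypothetical table: 1000 GB, 6000 IOPS, 90% of IO on the newest 100 GB.
whole = intensity(6000, 1000)
hot = intensity(5400, 100)
cold = intensity(600, 900)
for name, value in (("whole table", whole), ("hot partition", hot),
                    ("cold partitions", cold)):
    print(f"{name}: intensity {float(value):.2f} -> {tier_for(value)}")
```

Unpartitioned, the table averages out to a middling intensity of 6 and lands on fast disk; split by date, the hot partition (54) qualifies for Flash and the cold remainder (about 0.67) for high-capacity disk.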

- 21. IO Optimization Example • Object A has 300 GB and 10K IOPS • Intensity ~33: Use 1 Flash SSD • Object B has 600 GB and 4K IOPS • Intensity ~6.7: Use 14 Fast Disks • Alternatives (Flash Drives + Fast Disks: Price, IOPS, Capacity): • 0 + 47: $28,200, 14,100 IOPS, 14.1 TB • 1 + 14: $18,000, 34,200 IOPS, 4.5 TB • 3 + 0: $28,800, 90,000 IOPS, 960 GB
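The device counts in this example follow from one rule: buy enough devices to cover both the capacity and the IOPS requirement. A minimal sketch: the fast disk (300 GB, 300 IOPS, $600) comes from slide 23, but the flash SSD here is an assumption inferred from the slide's totals (320 GB, 30K IOPS, $9,600, i.e. the same $/GB and IOPS as the slide 23 flash device). The all-disk and all-flash options pool both objects onto one device type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    gb: int
    iops: int
    price: int


FAST_DISK = Device(gb=300, iops=300, price=600)  # slide 23
# Assumption: inferred from the slide 21 totals, not stated on the slide.
FLASH_SSD = Device(gb=320, iops=30_000, price=9_600)


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division without floating point."""
    return -(-a // b)


def devices_needed(gb: int, iops: int, dev: Device) -> int:
    """Enough devices to cover both the capacity and the IOPS requirement."""
    return max(ceil_div(gb, dev.gb), ceil_div(iops, dev.iops))


def totals(flash: int, disks: int) -> tuple[int, int, int]:
    """Price ($), IOPS and capacity (GB) of a flash + fast-disk mix."""
    return (flash * FLASH_SSD.price + disks * FAST_DISK.price,
            flash * FLASH_SSD.iops + disks * FAST_DISK.iops,
            flash * FLASH_SSD.gb + disks * FAST_DISK.gb)


A = (300, 10_000)  # Object A: GB, IOPS
B = (600, 4_000)   # Object B: GB, IOPS
BOTH = (A[0] + B[0], A[1] + B[1])

configs = {
    "all fast disk": (0, devices_needed(*BOTH, FAST_DISK)),
    "hybrid": (devices_needed(*A, FLASH_SSD), devices_needed(*B, FAST_DISK)),
    "all flash": (devices_needed(*BOTH, FLASH_SSD), 0),
}
for name, (flash, disks) in configs.items():
    price, iops, gb = totals(flash, disks)
    print(f"{name}: {flash} flash + {disks} disks -> ${price:,}, {iops:,} IOPS, {gb:,} GB")
```

The all-disk option is IOPS bound (47 disks for 14K IOPS), the all-flash option is capacity bound (3 drives for 900 GB), and the hybrid wins because each object sits on the tier that is cheapest for its own bottleneck.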

- 22. Matching IO Intensity to Storage Tier • Object Intensity = Object-IOPS / Object-GB • Match Object Intensity with Storage Tier • COST[tier] = MAX(Object IOPS * $/IOPS[tier], Object-GB * $/GB[tier]) • Tier Intensity[tier] = $/GB[tier] / $/IOPS[tier] • If Object-Intensity > Tier-Intensity then cost of object in this tier is IOPS bound • Otherwise cost of object in this tier is Capacity bound • Optimize: • If cost is IOPS bound, compare with lower (cheaper IOPS) tier • If cost is Capacity bound, compare with upper (cheaper capacity) tier
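The COST[tier] formula can be evaluated directly. A minimal sketch using the device prices from slide 23 and the two objects from slide 21; note that it replaces the slide's "compare with the adjacent tier" search with a brute-force minimum over all three tiers, which reaches the same answer here, and that it is a continuous model (fractional devices allowed), unlike the whole-drive counts on slide 21.

```python
from fractions import Fraction

# Device price ($), capacity (GB) and IOPS for each tier, from slide 23.
TIERS = {
    "Hi Cap Disk": (250, 1000, 100),
    "Fast Disk": (600, 300, 300),
    "Flash": (2400, 80, 30_000),
}


def tier_cost(obj_gb, obj_iops, tier):
    """COST[tier] = MAX(Object-IOPS * $/IOPS[tier], Object-GB * $/GB[tier]).

    Returns the cost and whether it is IOPS bound or Capacity bound.
    Fractions keep the dollar amounts exact.
    """
    price, gb, iops = TIERS[tier]
    iops_cost = obj_iops * Fraction(price, iops)
    capacity_cost = obj_gb * Fraction(price, gb)
    # Object intensity > tier intensity  <=>  IOPS cost exceeds capacity cost.
    bound = "IOPS" if iops_cost > capacity_cost else "Capacity"
    return max(iops_cost, capacity_cost), bound


def cheapest_tier(obj_gb, obj_iops):
    """Brute-force minimum over all tiers (stands in for the adjacent-tier search)."""
    costs = {tier: tier_cost(obj_gb, obj_iops, tier) for tier in TIERS}
    return min(costs, key=lambda t: costs[t][0]), costs


for name, gb, iops in (("Object A", 300, 10_000), ("Object B", 600, 4_000)):
    best, costs = cheapest_tier(gb, iops)
    detail = ", ".join(f"{t}: ${float(c):,.0f} ({b} bound)" for t, (c, b) in costs.items())
    print(f"{name} -> {best} [{detail}]")
```

Object A (300 GB, 10K IOPS) costs $9,000 on Flash (Capacity bound) against $20,000 on Fast Disk (IOPS bound); Object B (600 GB, 4K IOPS) is cheapest on Fast Disk at $8,000 (IOPS bound).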

- 23. Tier Intensity Cut Offs • Tier Intensity = $/GB / $/IOPS • Hi Cap Disk Intensity = ($250 / 1000GB) / ($250 / 100 IOPS) = 1/10 • Fast Disk Intensity = ($600 / 300GB) / ($600 / 300 IOPS) = 1 • Flash Intensity = ($2400 / 80GB) / ($2400 / 30K IOPS) = 375 • If Object Intensity is > 375: Choose Flash • If Object Intensity is between 1 and 375 • Break even when Intensity is $/GB[Flash] / $/IOPS[Fast-Disk] = ($2400 / 80GB) / ($600 / 300 IOPS) = 15 • If Object Intensity is between 1/10 and 1: • Break even when Intensity is $/GB[Fast-Disk] / $/IOPS[HC-Disk] = ($600 / 300 GB) / ($250 / 100 IOPS) = 0.8 • If Object Intensity is < 1/10: Choose High Capacity Disk
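The cut-offs on this slide can be recomputed from the device prices. A minimal sketch using exact fractions; the device tuples restate the slide's figures, and `choose_tier` is a hypothetical helper that applies the two break-even values (ties go to the cheaper-capacity tier).

```python
from fractions import Fraction

# Device price ($), capacity (GB) and IOPS, from this slide.
HC = (250, 1000, 100)
FAST = (600, 300, 300)
FLASH = (2400, 80, 30_000)


def per_gb(dev):
    price, gb, _ = dev
    return Fraction(price, gb)


def per_iops(dev):
    price, _, iops = dev
    return Fraction(price, iops)


def tier_intensity(dev):
    """$/GB / $/IOPS, which reduces to the device's own IOPS per GB."""
    return per_gb(dev) / per_iops(dev)


# Break-even between adjacent tiers: the capacity-bound cost on the faster
# tier equals the IOPS-bound cost on the slower tier.
flash_vs_fast = per_gb(FLASH) / per_iops(FAST)
fast_vs_hc = per_gb(FAST) / per_iops(HC)


def choose_tier(obj_intensity):
    if obj_intensity > flash_vs_fast:
        return "Flash"
    if obj_intensity > fast_vs_hc:
        return "Fast Disk"
    return "Hi Cap Disk"


for name, dev in (("Hi Cap Disk", HC), ("Fast Disk", FAST), ("Flash", FLASH)):
    print(f"{name}: tier intensity {tier_intensity(dev)}")
print(f"break-even Flash/Fast Disk: {flash_vs_fast}, Fast/Hi Cap Disk: {fast_vs_hc}")
```

The break-even values fall strictly between the tier intensities (0.1 < 0.8 < 1 and 1 < 15 < 375), which is why each comparison only ever involves two neighbouring tiers.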

- 24. Outline • The Flash Promises • Flash Technology • Flash Products • DB Workloads that are good for Flash • Flash Results • Storage Microbenchmarks • Oracle Microbenchmarks • OLTP Performance

- 25. Storage Micro-Benchmarks • Measurements were done using the fio tool (http://freshmeat.net/projects/fio/) • We compare: • 6 disks in 1 RAID-5 LUN, 15K RPM, NVRAM write buffer in SAS controller • 178 disks in 28 (10x7 + 18x6) RAID-5 LUNs, 15K RPM, NVRAM write buffer in SAS controller • 1 Intel X25-E Extreme SATA 32GB SSD (pre-production), NVRAM write buffer in SAS controller disabled, external enclosure • 1 FusionIO 160 GB PCIe card formatted as 80 GB (to reduce write amplification)

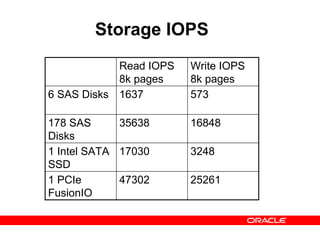

- 26. Storage IOPS (8K pages) • 6 SAS Disks: 1,637 read IOPS, 573 write IOPS • 178 SAS Disks: 35,638 read IOPS, 16,848 write IOPS • 1 Intel SATA SSD: 17,030 read IOPS, 3,248 write IOPS • 1 PCIe FusionIO: 47,302 read IOPS, 25,261 write IOPS

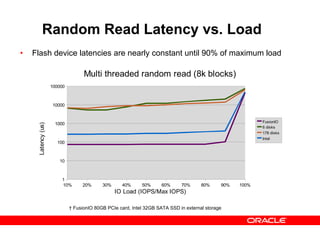
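One way to read these measurements is to ask how many disks of the large array a single flash device replaces. A minimal sketch using the slide's numbers; it assumes array throughput scales linearly with disk count, so each disk is credited with 1/178 of the 178-disk array's IOPS (about 200 read and 95 write IOPS per disk). The 6-disk array's per-disk rate is higher (about 273 read IOPS), so the equivalents depend on the baseline chosen.

```python
# Measured 8K IOPS from slide 26: (read, write).
MEASURED = {
    "6 SAS Disks": (1637, 573),
    "178 SAS Disks": (35638, 16848),
    "1 Intel SATA SSD": (17030, 3248),
    "1 PCIe FusionIO": (47302, 25261),
}
ARRAY_DISKS = 178


def disk_equivalents(device):
    """Number of array disks one device matches, for reads and for writes.

    Assumes linear scaling: each disk contributes 1/178 of the array's IOPS.
    """
    array_read, array_write = MEASURED["178 SAS Disks"]
    read, write = MEASURED[device]
    per_disk_read = array_read / ARRAY_DISKS
    per_disk_write = array_write / ARRAY_DISKS
    return read / per_disk_read, write / per_disk_write


for device in ("1 Intel SATA SSD", "1 PCIe FusionIO"):
    reads, writes = disk_equivalents(device)
    print(f"{device}: ~{reads:.0f} disks for reads, ~{writes:.0f} disks for writes")
```

On this baseline the FusionIO card is worth roughly 236 disks for reads and about 267 for writes, while the Intel SATA SSD is worth roughly 85 for reads but only about 34 for writes, reflecting its much lower write rate.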

- 27. Random Read Latency vs. Load • Flash device latencies are nearly constant until 90% of maximum load • [Chart: Multi-threaded random read (8K blocks): latency (µs, log scale) vs. IO load (IOPS / max IOPS) for FusionIO, Intel, 6 disks, and 178 disks] † FusionIO 80GB PCIe card, Intel 32GB SATA SSD in external storage

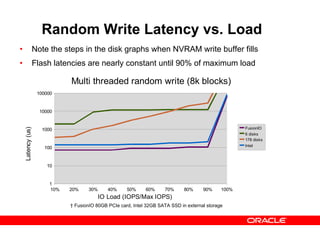

- 28. Random Write Latency vs. Load • Note the steps in the disk graphs when the NVRAM write buffer fills • Flash latencies are nearly constant until 90% of maximum load • [Chart: Multi-threaded random write (8K blocks): latency (µs, log scale) vs. IO load (IOPS / max IOPS) for FusionIO, Intel, 6 disks, and 178 disks] † FusionIO 80GB PCIe card, Intel 32GB SATA SSD in external storage

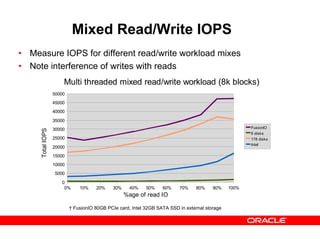

- 29. Mixed Read/Write IOPS • Measure IOPS for different read/write workload mixes • Note interference of writes with reads • [Chart: Multi-threaded mixed read/write workload (8K blocks): total IOPS vs. percentage of read IO for FusionIO, Intel, 6 disks, and 178 disks] † FusionIO 80GB PCIe card, Intel 32GB SATA SSD in external storage

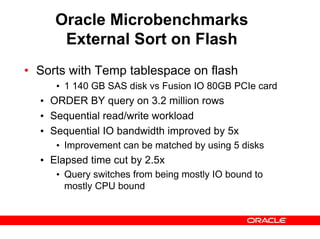

- 30. Oracle Microbenchmarks: External Sort on Flash • Sorts with Temp tablespace on flash • One 140 GB SAS disk vs. one FusionIO 80 GB PCIe card • ORDER BY query on 3.2 million rows • Sequential read/write workload • Sequential IO bandwidth improved by 5x • Improvement can be matched by using 5 disks • Elapsed time cut by 2.5x • Query switches from being mostly IO bound to mostly CPU bound

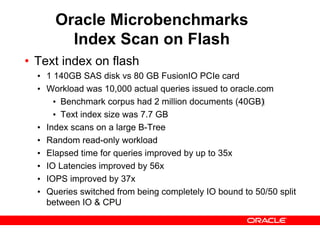
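The gap between the 5x bandwidth gain and the 2.5x elapsed-time gain can be explained with an Amdahl-style calculation. A minimal sketch, assuming IO and CPU time do not overlap and only the IO portion gets faster; under that assumption, 1 / elapsed_speedup = (1 - f) + f / io_speedup, where f is the fraction of the original elapsed time spent on IO. The function names are hypothetical.

```python
from fractions import Fraction


def io_fraction_before(io_speedup, elapsed_speedup):
    """Solve 1/S = (1 - f) + f/k for f, the original IO share of elapsed time.

    Assumes IO and CPU do not overlap and only IO time shrinks (by k).
    """
    k = Fraction(io_speedup)
    s = Fraction(elapsed_speedup)
    return (1 - 1 / s) / (1 - 1 / k)


def shares_after(f, io_speedup):
    """IO and CPU shares of the new, shorter elapsed time."""
    io = f / Fraction(io_speedup)
    cpu = 1 - f
    total = io + cpu
    return io / total, cpu / total


f = io_fraction_before(5, Fraction(5, 2))
io_after, cpu_after = shares_after(f, 5)
print(f"IO share before: {float(f):.1%}; after: IO {float(io_after):.1%}, "
      f"CPU {float(cpu_after):.1%}")
```

Under this serial model the sort spent 75% of its time on IO before the move and only 37.5% after, so CPU becomes the larger share (62.5%), consistent with the slide's observation that the query became mostly CPU bound.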

- 31. Oracle Microbenchmarks: Index Scan on Flash • Text index on flash • One 140 GB SAS disk vs. one 80 GB FusionIO PCIe card • Workload was 10,000 actual queries issued to oracle.com • Benchmark corpus had 2 million documents (40 GB) • Text index size was 7.7 GB • Index scans on a large B-Tree • Random read-only workload • Elapsed time for queries improved by up to 35x • IO latencies improved by 56x • IOPS improved by 37x • Queries switched from being completely IO bound to a 50/50 split between IO & CPU

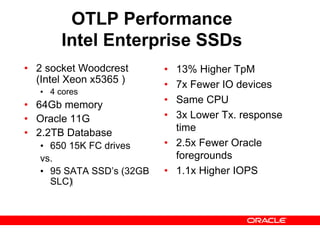

- 32. OLTP Performance: Intel Enterprise SSDs • 2-socket Woodcrest (Intel Xeon X5365) • 4 cores • 64 GB memory • Oracle 11g • 2.2 TB Database • 650 15K FC drives vs. • 95 SATA SSDs (32GB SLC) • 13% Higher TpM • 7x Fewer IO devices • Same CPU • 3x Lower Tx. response time • 2.5x Fewer Oracle foregrounds • 1.1x Higher IOPS

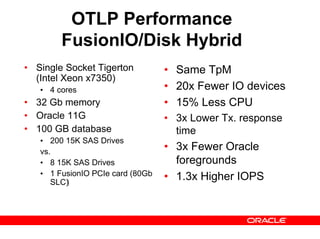

- 33. OLTP Performance: FusionIO/Disk Hybrid • Single-socket Tigerton (Intel Xeon X7350) • 4 cores • 32 GB memory • Oracle 11g • 100 GB database • 200 15K SAS Drives vs. • 8 15K SAS Drives • 1 FusionIO PCIe card (80 GB SLC) • Same TpM • 20x Fewer IO devices • 15% Less CPU • 3x Lower Tx. response time • 3x Fewer Oracle foregrounds • 1.3x Higher IOPS

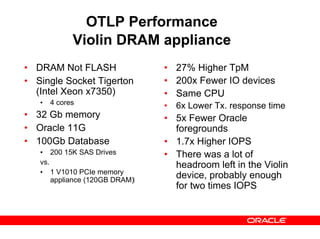

- 34. OLTP Performance: Violin DRAM appliance • DRAM, Not Flash • Single-socket Tigerton (Intel Xeon X7350) • 4 cores • 32 GB memory • Oracle 11g • 100 GB Database • 200 15K SAS Drives vs. • 1 V1010 PCIe memory appliance (120GB DRAM) • 27% Higher TpM • 200x Fewer IO devices • Same CPU • 6x Lower Tx. response time • 5x Fewer Oracle foregrounds • 1.7x Higher IOPS • There was a lot of headroom left in the Violin device, probably enough for twice the IOPS

- 35. What can Flash Storage do for you? • Reduce Transaction Latency • Improve customer satisfaction • Remove performance bottlenecks caused by serialized reads • Save money/power/space by storing High Intensity objects on fewer Flash devices