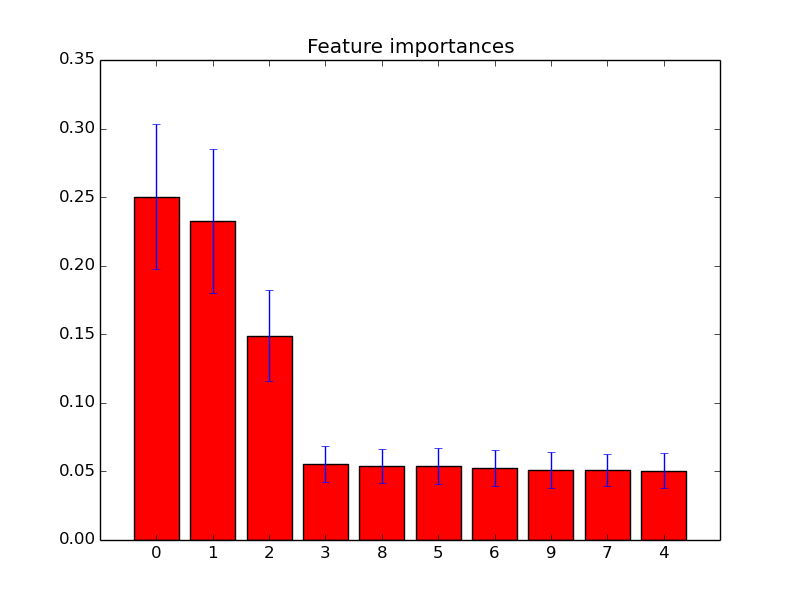

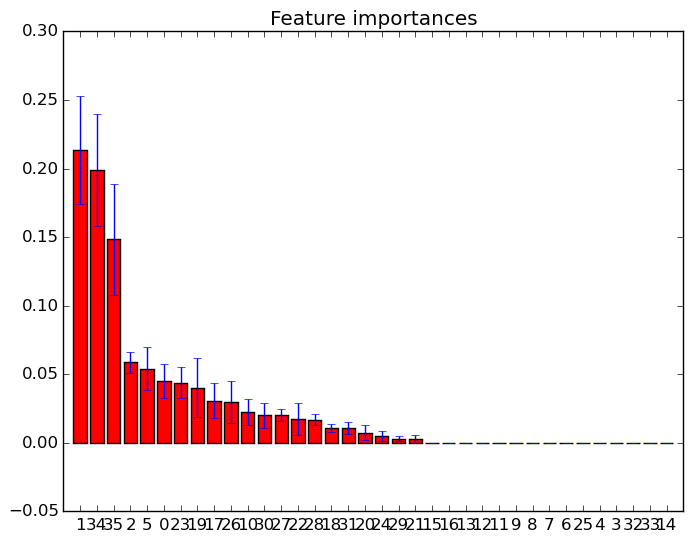

You can simply use the feature_importances_ attribute to select the features with the highest importance score. So for example you could use the following function to select the K best features according to importance.

def selectKImportance(model, X, k=5): return X[:,model.feature_importances_.argsort()[::-1][:k]]

Or if you're using a pipeline the following class

class ImportanceSelect(BaseEstimator, TransformerMixin): def __init__(self, model, n=1): self.model = model self.n = n def fit(self, *args, **kwargs): self.model.fit(*args, **kwargs) return self def transform(self, X): return X[:,self.model.feature_importances_.argsort()[::-1][:self.n]]

So for example:

>>> from sklearn.datasets import load_iris >>> from sklearn.ensemble import RandomForestClassifier >>> iris = load_iris() >>> X = iris.data >>> y = iris.target >>> >>> model = RandomForestClassifier() >>> model.fit(X,y) RandomForestClassifier(bootstrap=True, class_weight=None, criterion='gini', max_depth=None, max_features='auto', max_leaf_nodes=None, min_samples_leaf=1, min_samples_split=2, min_weight_fraction_leaf=0.0, n_estimators=10, n_jobs=1, oob_score=False, random_state=None, verbose=0, warm_start=False) >>> >>> newX = selectKImportance(model,X,2) >>> newX.shape (150, 2) >>> X.shape (150, 4)

And clearly if you wanted to selected based on some other criteria than "top k features" then you can just adjust the functions accordingly.