Researchers at the Massachusetts Institute of Technology have developed a device that uses wireless signals to detect silhouettes of people who aren't within sight — even on the other side of a wall.

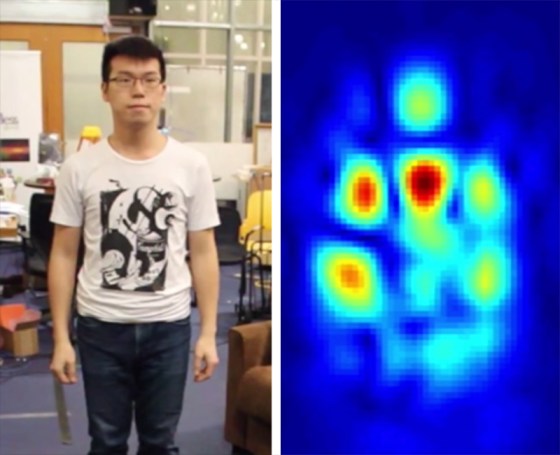

The machine sends out wireless signals that bounce off a person, then uses the reflections to build an image that resembles one from a heat-sensing camera.

It works in a way similar to the Microsoft Kinect — a device that enables users to employ body movements and gestures to play video games and do other things.

Researchers at MIT's Computer Science and Artificial Intelligence Lab have been working on pieces of the technology for years. The team previously figured out how to track movement through a wall.

The most recent breakthrough, described in a study led by MIT student Fadel Adib, is the device's new ability to trace the movement of an entire silhouette. The technology can also trace the movement of a body part — say, a hand drawing in the air — and can distinguish up to 15 different individuals with 90 percent accuracy.

The team will present its findings at the SIGGRAPH Asia conference in November.

The applications are far-ranging. If the technology can be improved, it would allow people to interact with devices in their homes just by making gestures, without being in sight of a sensor, or without having to wear a sensor on their hands. This would further enable the "pervasive computing" (also called ubiquitous computing) concept, which holds that people will be able to interact with sensor-enabled "smart" devices and computers all around them.

One application could be in filmmaking — the technology could conceivably allow performers doing special effects sequences to shed the sensor-covered suits currently in use.

The researchers said their device could be used by firefighters to determine if a person is stuck in a burning building, or if someone has been in an accident. The MIT group has already spun off another device called Emerald, which is designed to help family and caregivers monitor a senior's home for falls or accidents.

"We're working to turn this technology into an in-home device that can call 911 if it detects that a family member has fallen unconscious," said researcher Dina Katabi, director of the MIT Center for Wireless Networks and Mobile Computing, in a statement released along with the report. "You could also imagine it being used to operate your lights and TVs, or to adjust your heating by monitoring where you are in the house."

The device relies on a wireless signal the researchers developed themselves, which uses far less power than Wi-Fi or smartphone signals and will not interfere with them.

Significant limitations

Of course, the technology could also be used by government and law enforcement for surveillance and spying, and one could imagine criminals using it to determine whether a home or building is empty.

The invention still has limitations. First, the image resolution remains relatively low: the device picks up only a coarse silhouette of a person or object, not fine details such as the fingers of a hand.

Second, the device works by taking a series of images of individual body parts, which it then compiles into a full image of the person. As a result, it needs the subject to walk toward the device so that it can capture the series of images required to compile a full silhouette.
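For readers curious how partial snapshots might be stitched together, here is a minimal Python sketch. It is not the MIT team's method: it assumes each snapshot is a small grid of reflection strengths between 0 and 1, and the names `combine_snapshots`, `to_silhouette` and the 0.5 threshold are hypothetical choices made only for illustration.

```python
from typing import List

# Hypothetical representation: a snapshot is a 2D grid of reflection
# strengths (0.0 = nothing detected, 1.0 = strong reflection).
Frame = List[List[float]]


def combine_snapshots(frames: List[Frame]) -> Frame:
    """Merge partial snapshots by keeping the strongest value seen per cell."""
    if not frames:
        raise ValueError("need at least one snapshot")
    rows, cols = len(frames[0]), len(frames[0][0])
    combined = [[0.0] * cols for _ in range(rows)]
    for frame in frames:
        for r in range(rows):
            for c in range(cols):
                combined[r][c] = max(combined[r][c], frame[r][c])
    return combined


def to_silhouette(frame: Frame, threshold: float = 0.5) -> List[List[int]]:
    """Turn reflection strengths into a coarse 0/1 silhouette mask."""
    return [[1 if value >= threshold else 0 for value in row] for row in frame]


# Two made-up snapshots, each capturing a different body part.
head = [[0.9, 0.0], [0.0, 0.0]]
torso = [[0.0, 0.0], [0.8, 0.7]]
silhouette = to_silhouette(combine_snapshots([head, torso]))
```

Neither snapshot alone covers the whole figure, but the merged mask does, which illustrates why a single frame of a stationary subject would not be enough.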

However, the device can distinguish several people from one another by using body shape and height information to compile what the researchers call "silhouette fingerprints." This would allow several people, such as the members of a large family or the employees of a business, to control the device.
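One simple way to picture fingerprint matching is a nearest-neighbor lookup, sketched below in Python. This is an assumption for illustration, not the researchers' algorithm: the two features (height and shoulder width in centimeters), the enrolled names and the `identify` function are all hypothetical.

```python
import math
from typing import Dict, Tuple

# Hypothetical fingerprint: (height_cm, shoulder_width_cm).
Fingerprint = Tuple[float, float]

# Made-up enrolled users and measurements, for illustration only.
enrolled: Dict[str, Fingerprint] = {
    "alice": (165.0, 38.0),
    "bob": (182.0, 46.0),
}


def identify(measurement: Fingerprint, known: Dict[str, Fingerprint]) -> str:
    """Return the enrolled name whose fingerprint is closest to the measurement."""
    return min(known, key=lambda name: math.dist(known[name], measurement))


match = identify((180.0, 45.0), enrolled)
```

Here the new measurement lies much closer to the second enrolled fingerprint, so `match` is `"bob"`. A real system would need many more features and a way to reject people who are not enrolled at all.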

The study said the team believes the device's limitations "can be addressed as our understanding of wireless reflections in the context of computer graphics and vision evolves."