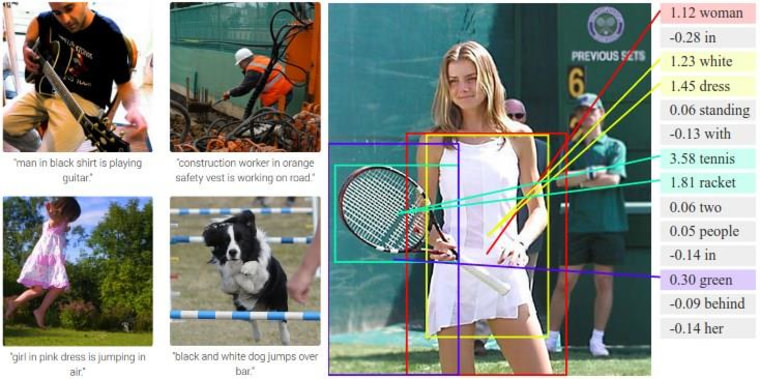

A picture may be worth a thousand words, but it can be described in far fewer if you know what you're looking at. New research has improved the ability of computers to do just that: recognize and categorize the contents of photos in very human-like ways. Teams at Dartmouth, Stanford and Google have been exploring the field, and the results are impressive. The Stanford and Google systems produce natural-language descriptions of entire scenes, not just single objects: "woman in white dress plays tennis," for instance, or "two dogs run in a park." Dartmouth's research focuses on the benefits of making this information searchable. By having its artificial-intelligence software analyze and search the contents of images as well as text, the Dartmouth team improved search precision by 30 percent.

The systems still make errors, however, and must be "trained" extensively to recognize particular objects and actions. A system that had never seen a tennis racket, for instance, could not guess what one is. For now, computers can't truly understand scenes and images, but they don't need to in order to identify their parts for searching and categorization.
