MCP! It’s the new buzzword in the AI world. So I thought: why not be part of this buzz myself? That’s why I wrote this blog post on using an MCP server with Semantic Kernel and Azure AI Foundry.

Let’s start with the basics: what is MCP? Many blogs and videos helped me grasp the concept, and I’ll drop those links at the end. For me, the simplest way to understand MCP was this: just as REST defined a standardized way to interact with web APIs, the Model Context Protocol (MCP), introduced by Anthropic, provides a standardized way for LLMs to interact with external services.
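To make the analogy a little more concrete: under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages, and a client discovers a server’s tools with the `tools/list` method and invokes one with `tools/call`. Here is a minimal sketch of those two requests built with System.Text.Json. It only constructs the messages and never contacts a server, and the tool name `browser_navigate` with its `url` argument is just an illustrative example:

```csharp
using System;
using System.Text.Json.Nodes;

// Build a JSON-RPC 2.0 request envelope, the message format MCP uses.
JsonObject MakeRequest(int id, string method, JsonObject? parameters = null)
{
    var request = new JsonObject
    {
        ["jsonrpc"] = "2.0",
        ["id"] = id,
        ["method"] = method
    };
    if (parameters is not null)
    {
        request["params"] = parameters;
    }
    return request;
}

// Discovery: ask the server which tools it offers.
var listTools = MakeRequest(1, "tools/list");

// Invocation: call one tool by name with JSON arguments (illustrative tool and URL).
var callTool = MakeRequest(2, "tools/call", new JsonObject
{
    ["name"] = "browser_navigate",
    ["arguments"] = new JsonObject { ["url"] = "https://www.bing.com/news" }
});

Console.WriteLine(listTools.ToJsonString());
Console.WriteLine(callTool.ToJsonString());
```

Compare this with REST, where every API chooses its own URLs and payloads: every MCP server that offers tools understands these same two methods, so one client can work with any of them.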

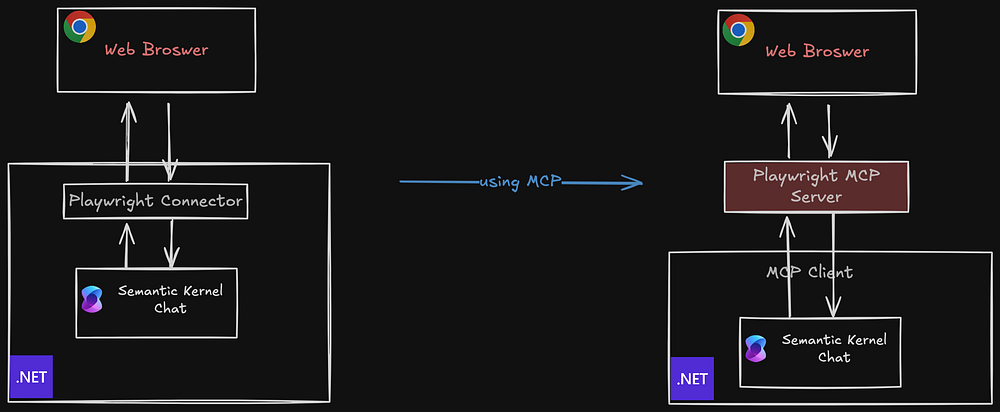

Whenever I develop an AI app and want to add a new tool or action to my AI agent, I usually need to implement a custom integration. For example, if I want my AI app to connect with AI Search, I have to define a connector to interact with the vector store in AI Search. Now, if I want to add web search capabilities, I need yet another connector to handle that.

AI frameworks such as LangChain, Semantic Kernel, and AutoGen do offer out-of-the-box capabilities and connectors for common services, but those connectors are tied to each framework, so they don’t always scale well across applications.

With MCP, I no longer need to deal with most of that complexity. MCP server developers have already built these connectors and exposed them through a standardized protocol. All I have to do is connect to those servers from my MCP client (which could be the Claude Desktop app, Cursor, Chainlit, etc.) and call their tools without worrying about integration details. Isn’t that cool?

Now let’s dive into a use case. I want to build an LLM app in .NET that opens a news website, clicks the first link, and summarizes the content. I created a similar agent using AutoGen in a previous blog post; you can find more details there.

Step 1: Set up Semantic Kernel
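The builder call in this step takes three values: the Azure OpenAI deployment name, the endpoint, and the API key. Pasting them in as literals is fine for a quick demo; if you’d rather keep the key out of your source code, here is a minimal sketch that reads them from environment variables instead. The variable names are my own hypothetical choice; Semantic Kernel does not look for them:

```csharp
using System;

// Hypothetical variable names: nothing in Semantic Kernel requires these exact names.
(string Deployment, string Endpoint, string ApiKey)? TryLoadAzureOpenAIConfig()
{
    var deployment = Environment.GetEnvironmentVariable("AZURE_OPENAI_DEPLOYMENT");
    var endpoint = Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT");
    var apiKey = Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY");

    // Treat a missing or blank value as "not configured".
    if (string.IsNullOrWhiteSpace(deployment) ||
        string.IsNullOrWhiteSpace(endpoint) ||
        string.IsNullOrWhiteSpace(apiKey))
    {
        return null;
    }
    return (deployment, endpoint, apiKey);
}

var config = TryLoadAzureOpenAIConfig();
if (config is { } c)
{
    // Print only the non-secret values; never log the key.
    Console.WriteLine($"Deployment '{c.Deployment}' at {c.Endpoint}");
}
else
{
    Console.WriteLine("Set AZURE_OPENAI_DEPLOYMENT, AZURE_OPENAI_ENDPOINT and AZURE_OPENAI_API_KEY first.");
}
```

With the tuple in hand, the builder call becomes builder.AddAzureOpenAIChatCompletion(c.Deployment, c.Endpoint, c.ApiKey).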

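```csharp
using Microsoft.SemanticKernel;

var builder = Kernel.CreateBuilder();
// Deployment name, endpoint, and API key of your Azure OpenAI resource.
builder.AddAzureOpenAIChatCompletion(
    "GPT4ov1",
    "https://<AzureOpenAI>.openai.azure.com",
    "<REPLACE_WITH_KEY>");
Kernel kernel = builder.Build();
```

Step 2: Install the official C# MCP SDK in your .NET app and set up the MCP client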
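Before the code, a quick look at what TransportType = "stdio" means. The client doesn’t call a URL; it starts the MCP server as a child process (here `npx -y @playwright/mcp@latest`, so Node.js needs to be installed) and exchanges newline-delimited JSON-RPC messages over that process’s stdin and stdout, starting with an `initialize` handshake. The SDK does all of this for you. Here is a rough sketch of the first step, assuming protocol version `2024-11-05`; it prepares the process settings and the first message but doesn’t launch anything:

```csharp
using System;
using System.Diagnostics;
using System.Text.Json.Nodes;

// Same command and arguments as the McpServerConfig in this step.
var startInfo = new ProcessStartInfo("npx")
{
    RedirectStandardInput = true,   // client -> server messages
    RedirectStandardOutput = true,  // server -> client messages
    UseShellExecute = false
};
foreach (var arg in "-y @playwright/mcp@latest".Split(' ', StringSplitOptions.RemoveEmptyEntries))
{
    startInfo.ArgumentList.Add(arg);
}

// First message the client sends: an initialize request (protocol version assumed).
var initialize = new JsonObject
{
    ["jsonrpc"] = "2.0",
    ["id"] = 0,
    ["method"] = "initialize",
    ["params"] = new JsonObject
    {
        ["protocolVersion"] = "2024-11-05",
        ["capabilities"] = new JsonObject(),
        ["clientInfo"] = new JsonObject { ["name"] = "Playwright", ["version"] = "1.0.0" }
    }
};

// Each message is written as a single line; ToJsonString() emits compact JSON with no newlines.
string line = initialize.ToJsonString() + "\n";
Console.Write(line);
```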

```csharp
using ModelContextProtocol.Client;
using ModelContextProtocol.Configuration;

public static async Task<IMcpClient> GetMCPClientForPlaywright()
{
    McpClientOptions options = new()
    {
        ClientInfo = new() { Name = "Playwright", Version = "1.0.0" }
    };

    // Start the Playwright MCP server with npx and communicate with it over stdio.
    var config = new McpServerConfig
    {
        Id = "Playwright",
        Name = "Playwright",
        TransportType = "stdio",
        TransportOptions = new Dictionary<string, string>
        {
            ["command"] = "npx",
            ["arguments"] = "-y @playwright/mcp@latest",
        }
    };

    var mcpClient = await McpClientFactory.CreateAsync(config, options);
    return mcpClient;
}
```

You can verify that your client is set up correctly by printing out the tools available from the server:

```csharp
var mcpClient = await GetMCPClientForPlaywright();

// List every tool the Playwright MCP server exposes.
await foreach (var tool in mcpClient.ListToolsAsync())
{
    Console.WriteLine($"{tool.Name} ({tool.Description})");
}
```

Step 3: Convert MCP Functions to KernelFunctions

Semantic Kernel exposes tools and plugins as KernelFunctions, so when using MCP we need an extra step to convert the MCP tools into KernelFunctions. Semantic Kernel provides an extension for this, but it didn’t work with the latest version of the .NET MCP SDK (at least, not when I tried it). So I modified it to work with that version. I’ll share the code in my code reference.
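Conceptually, this conversion is an adapter. Each MCP tool arrives as a descriptor with a `name`, a `description`, and a JSON Schema `inputSchema`, and it has to become a named, described function that the kernel can advertise to the model and invoke. Here is a framework-free sketch of that idea: the `tools/list` payload is made up, `CallToolStub` is a hypothetical stand-in for the MCP client’s tool call, and the required-argument check is my own illustrative addition:

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json.Nodes;

// A made-up tools/list result with the fields MCP tool descriptors carry.
var toolsListResult = JsonNode.Parse("""
    {
      "tools": [
        {
          "name": "browser_navigate",
          "description": "Navigate to a URL",
          "inputSchema": { "type": "object", "properties": { "url": { "type": "string" } }, "required": ["url"] }
        },
        {
          "name": "browser_snapshot",
          "description": "Capture the current page",
          "inputSchema": { "type": "object", "properties": {} }
        }
      ]
    }
    """)!;

// Hypothetical stand-in for the MCP client's tools/call; it just echoes the request.
string CallToolStub(string toolName, JsonObject toolArgs) =>
    $"called {toolName} with {toolArgs.ToJsonString()}";

// The "plugin": tool name -> (description for the model, function the host can invoke).
var plugin = new Dictionary<string, (string Description, Func<JsonObject, string> Invoke)>();

foreach (var tool in toolsListResult["tools"]!.AsArray())
{
    var name = tool!["name"]!.GetValue<string>();
    var description = (string?)tool["description"] ?? "";
    var required = tool["inputSchema"]?["required"]?.AsArray() ?? new JsonArray();

    Func<JsonObject, string> invoke = arguments =>
    {
        // Illustrative check: reject calls missing arguments the schema marks as required.
        foreach (var requiredName in required)
        {
            var key = requiredName!.GetValue<string>();
            if (!arguments.ContainsKey(key))
                throw new ArgumentException($"Missing required argument '{key}' for {name}.");
        }
        return CallToolStub(name, arguments);
    };

    plugin[name] = (description, invoke);
}

foreach (var entry in plugin)
    Console.WriteLine($"{entry.Key}: {entry.Value.Description}");
```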

```csharp
// MapToFunctionsAsync is the extension described above (see my code reference).
var kernelFunctions = await mcpClient.MapToFunctionsAsync();
kernel.Plugins.AddFromFunctions("Browser", kernelFunctions);
```

[Update, 14th April 2025]:

Semantic Kernel now officially supports MCP, so you can use its out-of-the-box AsKernelFunction() extension to convert MCP tools into KernelFunctions.

```csharp
// AsKernelFunction() wraps each MCP tool so the kernel can expose it to the model.
var tools = await mcpClient.ListToolsAsync();
kernel.Plugins.AddFromFunctions("Browser", tools.Select(aiFunction => aiFunction.AsKernelFunction()));
```

Step 4: Send your first message
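The prompt in this step relies on `FunctionChoiceBehavior.Auto()`, which lets the model decide when to call the browser tools while Semantic Kernel invokes them and sends the results back. Conceptually that is a loop: send the conversation, run any tool the model asks for, append the result, and repeat until the model replies with plain text. Here is a framework-free sketch of that loop with a scripted fake model standing in for the chat model. The tool names and `FakeModel` are hypothetical, this is not Semantic Kernel’s actual implementation, and the round limit is my own safeguard:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical tools standing in for the MCP-backed KernelFunctions.
var tools = new Dictionary<string, Func<string, string>>
{
    ["browser_navigate"] = url => $"opened {url}",
    ["browser_click"] = target => $"clicked {target}"
};

// Scripted fake model: each turn yields either a tool call (Tool != null) or a final answer.
var script = new Queue<(string? Tool, string Payload)>();
script.Enqueue(("browser_navigate", "https://www.bing.com/news"));
script.Enqueue(("browser_click", "first result"));
script.Enqueue((null, "Summary of the first article."));

// A real model would read the history; this fake one just replays the script.
(string? Tool, string Payload) FakeModel(List<string> history) => script.Dequeue();

string RunWithAutoToolCalls(string prompt, int maxRounds = 10)
{
    var history = new List<string> { $"user: {prompt}" };
    for (var round = 0; round < maxRounds; round++)
    {
        var (tool, payload) = FakeModel(history);
        if (tool is null)
        {
            return payload; // a plain-text answer ends the loop
        }

        var result = tools[tool](payload);   // run the requested tool
        history.Add($"{tool} -> {result}");  // feed the result back for the next turn
    }
    throw new InvalidOperationException("Model kept requesting tools; giving up.");
}

var answer = RunWithAutoToolCalls("Open the first news link and summarize it.");
Console.WriteLine(answer);
```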

```csharp
using Microsoft.SemanticKernel.Connectors.OpenAI;

// Enable automatic function calling so the model can invoke the browser tools.
var executionSettings = new OpenAIPromptExecutionSettings
{
    Temperature = 0,
    FunctionChoiceBehavior = FunctionChoiceBehavior.Auto()
};

// Prompt
var prompt = "Summarize AI news for me related to MCP on bing news. Open first link and summarize content";
var result = await kernel.InvokePromptAsync(prompt, new(executionSettings)).ConfigureAwait(false);
Console.WriteLine($"\n\n{prompt}\n{result}");
```

Result:

Conclusion:

MCP is truly revolutionizing how we integrate external services with large language models. With its standardized interface and growing adoption, it’s not hard to imagine a future where every major service offers an MCP-compatible layer, unlocking powerful and intuitive AI interactions. Building AI apps has never been this accessible.

In my next blog, I’ll take it a step further and show you how to build your own MCP server from scratch. Stay tuned — exciting things ahead!

Happy learning, and cheers! 🥂🚀

References:

- https://modelcontextprotocol.io

- https://github.com/microsoft/playwright-mcp

- https://github.com/microsoft/semantic-kernel/tree/7a19ae350eaff5746ace52d2c894a9975d82ba59/dotnet/samples/Demos/ModelContextProtocol

- As promised earlier, here are the sources I used to understand MCP:
  - https://blog.dailydoseofds.com/p/visual-guide-to-model-context-protocol
  - https://www.youtube.com/watch?v=7j_NE6Pjv-E&t=875s
  - https://norahsakal.com/blog/mcp-vs-api-model-context-protocol-explained/
